This algorithm is a photo editor for 2 decades of archived pictures

In the digital age, photo editors can easily face image collections that number in the hundreds of thousands or millions. They simply don’t have the time to sort through every picture and evaluate its quality. Computer algorithms may be able to lighten the load of these media editors, giving them more time to devote to higher-level activities.

One idea about how to implement this sort of software assistant is to create a filter or set of filters that could cut through some of the noise by identifying the kinds of images that won’t ever get used from those most likely to be published. Such a filter would need to identify the key qualities in a photograph that signal its publication value. Exposure and sharpness might be a first step. Other filters to assign a value to facial expressions, peak action, beauty, or story telling – the aesthetics – would help more advanced kinds of evaluation.

Recently, our team of journalists and computer scientists at the University of Missouri set out to see if we could use these algorithms, collectively known as artificial intelligence (a.k.a “AI”), a means of teaching computers to “think” more like a human on repetitive tasks, to evaluate 22 years worth of photojournalistic images at the Columbia Missourian. This daily community newspaper staffed by School of Journalism students and edited by faculty has a collection approaching 2 million with varying levels of image quality and metadata. This catalog of still images, along with some video content, now occupies about 26 TB of storage. The storage space needed increases year over year.

Missourian photo editors routinely scan through many images in search of the right one for a particular story. Repetitive, laborious and time-consuming sorting work is made more difficult by the sheer volume. A way to cut through the clutter and streamline the selection process is needed.

Our team’s aim is to identify high-quality images that could prove useful in current and retrospective stories, and low-quality images to consider storing on less expensive systems or deleting. We started out by asking some computer science students to begin working on this challenge as part of a software engineering class project for junior and senior level computer science students. For computer scientists, a type of software called machine learning promises to solve this kind of problem, and the students were eager to test their skills with a real-world problem.

Machine learning comes in two forms: Supervised and unsupervised. Supervised machine learning creates a computational model of the aesthetic, technical, and subjective value of a particular image based on “training data,” which is added by real people who tag, rate, and classify each photo according to their standards. Humans basically tell the computers how to assign values to pictures in order to “train” them. The computers then identify digital patterns among similarly-rated photos. If enough humans rate enough photos, a fairly accurate software model can be created from that data. Then that model is used to evaluate a larger collection of photojournalistic images by comparing the digital patterns in the model with a new set of photos.

Unsupervised machine learning, sometimes referred to as artificial intelligence, identifies patterns based solely on a computer algorithm’s assessment of image similarity, and generates models used to identify these categories in other photo collections.

Our first attempt to evaluate Missourian images used supervised machine learning with a model built from images in a public collection of photographs. Using this model, with its limited subject range, and no photographer or editor ratings of photojournalistic images did not work well – ratings were mostly in the middle, for example, a range of 4-6 on a scale of 1-10. We needed to find and begin to develop training data from photographs more similar to our collection of news-related images.

Models used in machine learning are not “good” or “bad.” They can be more useful for some purposes, less useful for others. For example, Google created an image evaluation model using 300,000 photos from a contest. Each photo had one or more ratings from participants in the contest, and those scores generated a computer model representing the ratings and opinions of individuals with a wide range of experience taking photographs. If photojournalists did any of the rating, their participation was incidental. The resulting model does well predicting quality from the perspective of the general public, which is a step in the right direction. If we want a model that works for photojournalistic purposes, we may need to add another layer to our model or develop another model entirely.

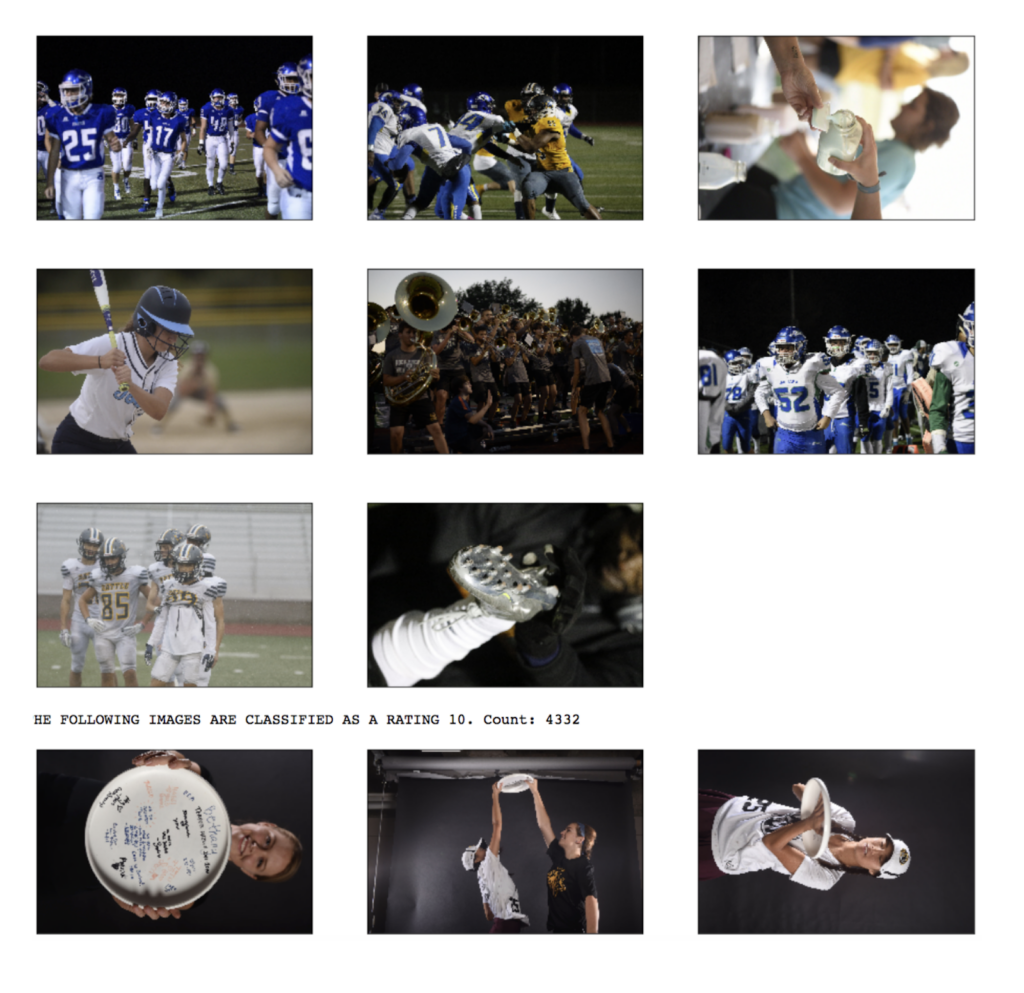

Here are some examples of highly-rated photos as processed by the AI algorithms:

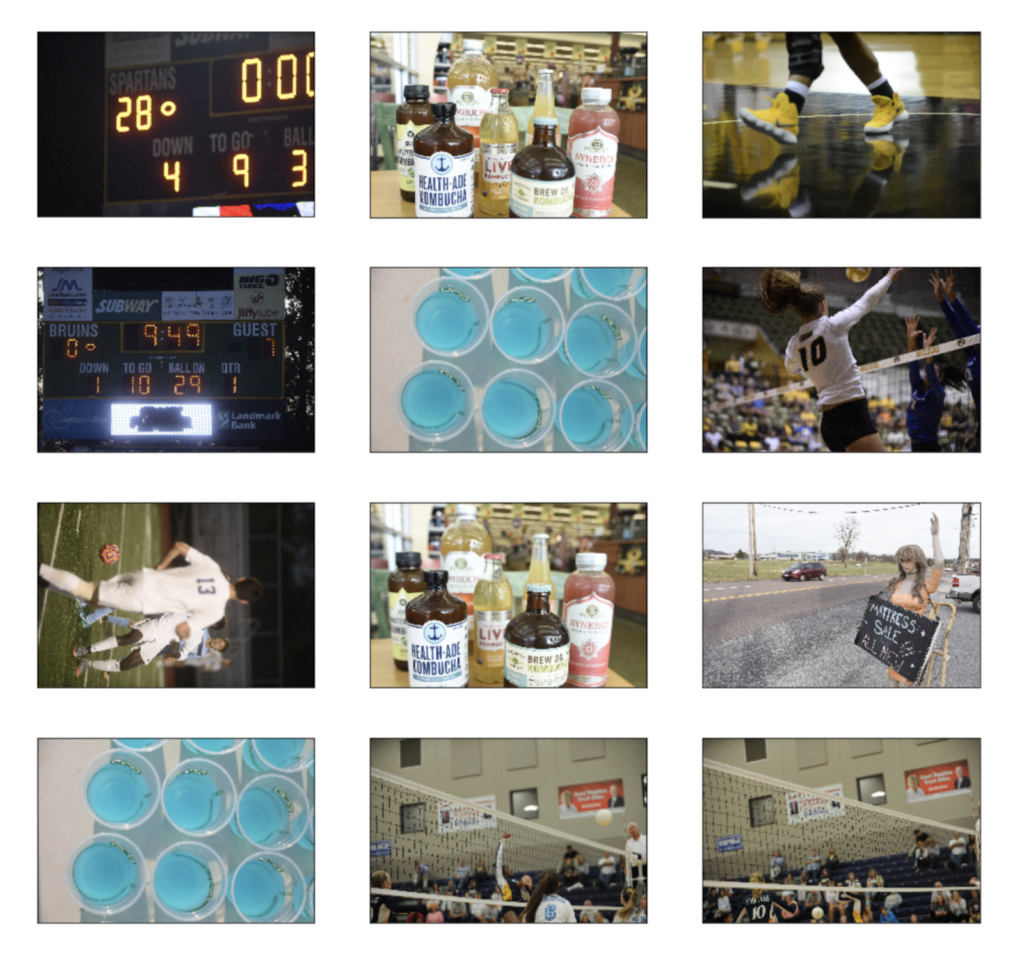

Same examples of low-rated photos as shown by the AI filters:

Photojournalists often take a large number of photographs at sporting events. For example, The Missourian has an over-abundance of football images. Artificial intelligence algorithms can identify highly similar photographs in collections like these. If we could eliminate some of those duplicates, just the ones we don’t really need, we could save the time an editor needs to look at and evaluate them. This would also save storage costs. It also probably would provide opportunities for student photojournalists to see that a large percentage of the photos they take are redundant.

Another model, called AVA, uses evaluations from professional photographers on a set of around 255,000 photographs, focusing on technical and aesthetic quality. The images in our collection also have signals of quality, often in the embedded metadata (data about data). By examining the IPTC metadata for the images, we can find key indicators of quality such as if an image was used in a publication, ratings from the photographer or editor, caption information and other details. Each of these models has some utility for rating our collection.

Why use a single machine learning algorithm when you can integrate several, each with its own perspective on the photographs value? A computer scientist can “stack” or sequence these models to help adjust or refine the results of their experiments. The current experiment utilizes three models: Google, AVA, and our own ratings.

The results of our testing thus far do a good job of identifying keeper images, and the shots that can be eliminated. Notably, the number of images that the computer algorithms say can be trashed (a score of 1 or 2 out of 10) is 15,319 out of a total of 76,505 in the test set. There are a total of 13,563 “keepers” (a score of 9 or 10) in the test set. Of course the amount of space each photo consumes from year to year is different because every new generation of camera saves a larger file, but the complete collection currently uses 26 Terabytes (2,600 Gigabytes) of storage space. On a collection of 2,000,000 photos, 20% (15,563 out of a total of 76,505) could be trashed, reducing the required storage space by 5-6 Terabytes to around 20, a reduction of six Terabytes. The annual cost of keeping one terabyte of storage in a datacenter, including equipment, staff, and backup is estimated by our team at $6,000/year.

In addition, if the collection grows without periodic “gardening,” capital equipment costs ranging from $40,000-$60,000 could be required every 2-3 years if the space each photo consumes, and the number of photos taken, continue to grow at current rates.

The work we’ve done thus far has been accomplished on standard computing devices. For the next steps, we have copied the digital photos over to a computer that uses Graphical Processing Units (GPU’s) that can run the machine learning algorithms much faster. This will allow us to evaluate more images and to adjust the models so that they are more accurate.

We also plan to begin evaluating the similarities within groups of photos from the same event such as a particular football game or city council meeting. This should give us another level of analysis with which to filter out the images we don’t need from the ones more likely to be published in the future.