How to verify videos

Videos can present a difficult challenge for verification when you aren’t receiving them from a trusted source

The views expressed in this column are those of the author and do not necessarily reflect the views of the Reynolds Journalism Institute or the University of Missouri.

I’m constantly finding and experimenting with new tools for journalists that come across my desk. My current testing table includes API tools, VPNs and a QR code generator. But one task that seemingly every newsroom needs to tackle is verifying contributed videos.

Videos present a steeper challenge than photos for a variety of reasons. They consist of a long series of images, not just one static image, so finding the origin isn’t as simple as a reverse image search or looking at the metadata in Photoshop. And they consist of multiple components and layers including audio, faces, voices, backgrounds, frames and timecodes. Any of those components could be fake or manipulated.
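One common workaround for the "long series of images" problem is to pull a handful of still frames from a video and run each one through a reverse image search. Here is a minimal Python sketch of choosing which moments to sample, assuming you already know the video's length. The function name `sample_timestamps` is hypothetical, and this is not how InVID or any other tool picks its keyframes; it only illustrates the idea of spreading checks across the whole clip.

```python
from typing import List


def sample_timestamps(duration_seconds: float, count: int) -> List[float]:
    """Return `count` evenly spaced timestamps (in seconds) inside the clip.

    The very first and last instants are skipped, since they are often
    title cards or fades rather than useful footage.
    """
    if duration_seconds <= 0 or count <= 0:
        return []
    step = duration_seconds / (count + 1)
    return [round(step * i, 2) for i in range(1, count + 1)]


# Example: five frames to check from a 90-second clip.
print(sample_timestamps(90, 5))
```

Each timestamp is a spot where you could grab a screenshot and search for earlier copies of that image.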

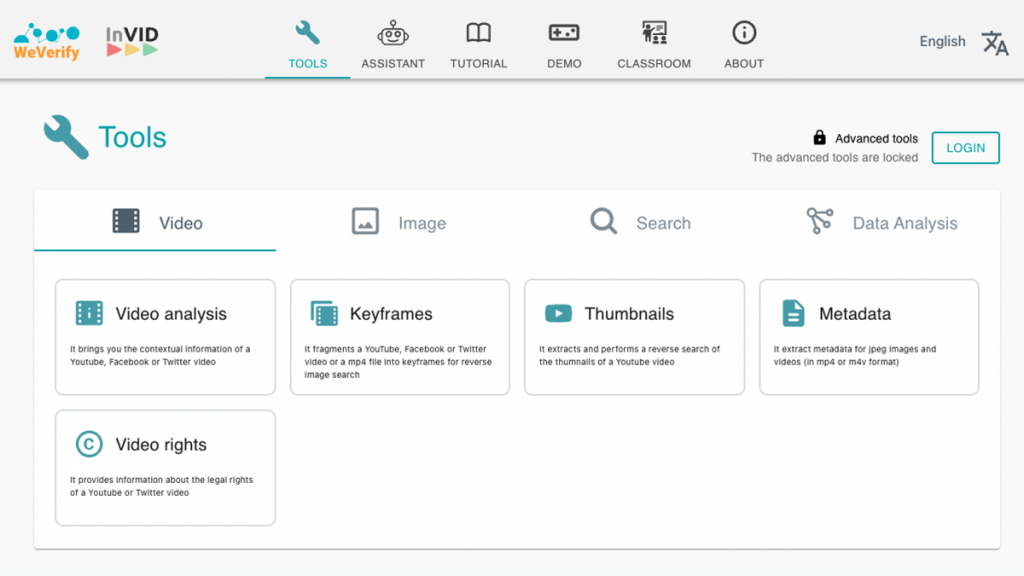

The first tool I would recommend for tackling these challenges is called InVID. It was created by a consortium of media and tech organizations in Europe, and comes highly recommended by Bellingcat, a world-famous open-source investigation unit. Even Amnesty International, a global human rights nonprofit, uses it to investigate videos of human rights abuses.

InVID, which is short for “In Video Veritas,” unspools an enormous list of facts and figures for whichever video you plug in. It surfaces metadata like upload dates, thumbnails and internal descriptions, and it offers keyframes, reverse image search and more. You can find a full list of the platform’s many features on the project’s website. I can see why Amnesty International calls this tool the “Swiss Army knife” of digital verification.

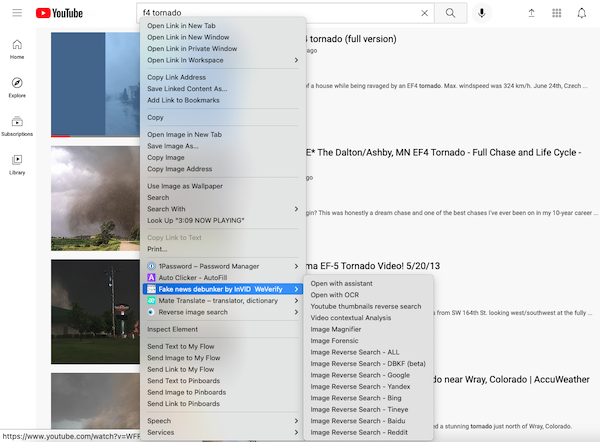

These data points are available on InVID’s detailed dashboard, which is most easily accessed through the tool’s Chrome or Firefox plug-in. Once you have installed the plug-in, you can either open the dashboard in a new tab or right-click on a video or image to run some simpler verification tasks.

The extension was designed for Chrome and Firefox, but it can also be installed on Opera or Microsoft Edge thanks to their support for Chrome extensions. Unfortunately, despite being one of the most popular browsers in the world, Safari does not offer a lot of these kinds of add-ons.

If you install the browser extension, though, you can run a video through the InVID vetting process with a simple right-click.

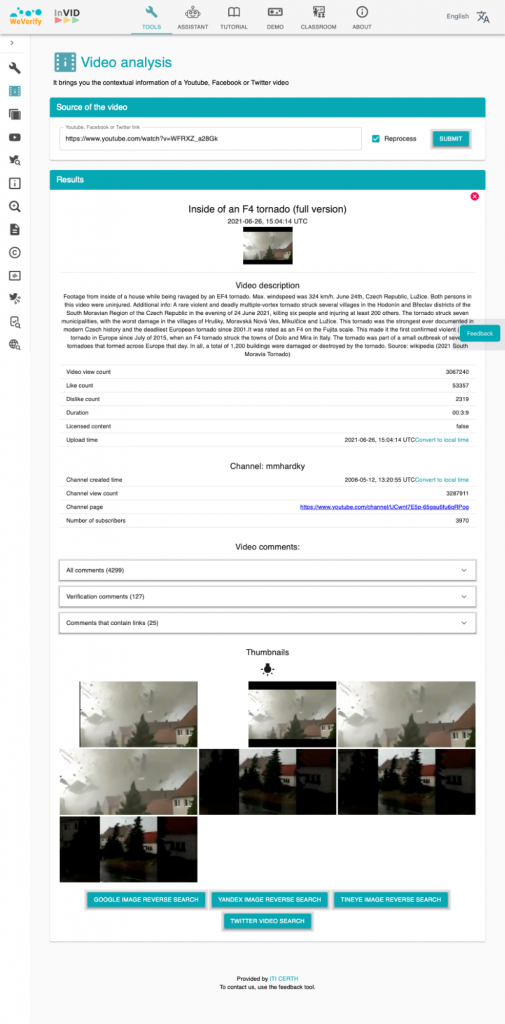

As an example, I ran InVID on a YouTube video claiming to show a strong tornado ripping through Lužice in the Czech Republic on June 24 (the year is not specified). The YouTube description contains a detailed summary of the storm and even cites a source: Wikipedia.

A news search returned a reliable source, the Reuters news agency, reporting that a tornado really did hit the Czech Republic on June 24 of this year. The InVID dashboard shows the video was uploaded to YouTube (not recorded!) at “2021-06-26, 15:04:14 UTC,” which means it went up almost two full days after the storm hit. From the combination of these two trustworthy sources, Reuters and InVID, we know the video could at least show the storm it claims to show.
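The timestamp check above is simple date arithmetic, and it can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the year 2021 is inferred from the upload date, only the calendar date of the storm is used (so the whole day in UTC is treated as the possible window), and the helper names `upload_gap` and `is_plausible` are made up for this example.

```python
from datetime import datetime, timedelta, timezone

# Upload time as shown on the InVID dashboard.
upload_time = datetime(2021, 6, 26, 15, 4, 14, tzinfo=timezone.utc)

# Assumption: only the date of the storm is known, so the event window
# is the whole of June 24, 2021, in UTC.
event_start = datetime(2021, 6, 24, tzinfo=timezone.utc)
event_end = event_start + timedelta(days=1)


def upload_gap(start, end, uploaded):
    """Return the (shortest, longest) possible gap between event and upload."""
    return uploaded - end, uploaded - start


def is_plausible(start, uploaded):
    """A video uploaded before the event began cannot show the event."""
    return uploaded >= start


shortest, longest = upload_gap(event_start, event_end, upload_time)
print("Gap is between", shortest, "and", longest)
print("Could show the storm:", is_plausible(event_start, upload_time))
```

A passing check only means the video could show the event. A video uploaded before the storm, on the other hand, would be ruled out immediately.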

This is exactly the kind of content a newsroom might find itself wanting to republish during an event where there is a high demand for news, like a dangerous storm or local emergency. Unfortunately, events like natural disasters are also ripe for misinformation. A manipulated photo of a shark swimming on a highway is notorious for resurfacing nearly every time there is flooding, each time presented as if it came from the latest storm.

This is all the more reason to vet videos (and photos) before you even do something as simple as repost them on a newsroom’s social media, let alone use them as a source for a story. Videos can act as misinformation in several different ways: They can be digitally edited, as in the case of the shark; they can be accurate footage that is misrepresented or taken out of context; or they can be fabricated entirely, as in the case of deepfakes.

Deepfakes are videos that have been digitally altered, most commonly to make a human subject look like they are doing or saying something they didn’t actually do. Many researchers and practitioners in the anti-misinformation space consider deepfakes to be an overblown concern, but I think they serve as an especially condensed example of how to assess video misinformation.

Bellingcat, the open-source investigation group I mentioned earlier, recommends experimenting with a deepfake detector created by an AI company called Sensity. The invite-only tool attempts to detect digitally altered faces using a proprietary AI system, which basically means they’re not going to tell us how they do it. I think deepfake detection tools will most likely become more useful and accessible over the next few years.

Besides tools made to specifically look at deepfakes, reporters can and should use the same backgrounding skills they use for other media, like photos, documents and human interviews. For example, in that tornado video: What was the weather like that day? Does the town of Lužice really look like that? You can use Wolfram Alpha for the former and Google Earth for the latter.

These are all very broad recommendations, but they tie back to something I also touched on in my column last month: Digital verification is all a game of technological prowess combined with the journalistic mindset that reporters and editors already have.

As journalists, we have extensive experience in what is basically critical thinking. We look at every source, whether that be a video, a blog post, an interview or a dataset, through the eyes of a professional skeptic. Anti-misinformation tools like InVID are simply the latest technological advancements to assist journalistic critical thinking.