We initially tested the free version of Chat Thing using RJI’s website before partnering with Queen City Nerve on an experiment.

Building a chatbot trained on your newsroom’s content

What it takes: time, money and ethical considerations

After speaking with news organizations about practical applications of generative AI, we were curious whether a chatbot trained solely on a newsroom’s content could accurately answer reader questions. Our Innovation in Focus team researched the options and found several platforms that let people build customized bots without coding or technical knowledge.

Chatbots can be a way for readers to engage with continuous coverage of a topic or get specific questions answered. And conversations between readers and a chatbot can help us understand what questions our communities have that we aren’t answering in our work.

At Graham Media, Dustin Block told us how a chatbot built with Zendesk helped manage reader questions and surface more story ideas and tips on their Detroit site. Alex Goldmark, a John S. Knight Fellow at Stanford and executive producer of NPR’s Planet Money, shared some early lessons from developing a chatbot trained on Planet Money’s archives. Goldmark challenged how we thought about chatbots: The bot wasn’t pulling answers directly from the news stories. It was still predicting what the correct answer could be, but it had a better chance of answering accurately because it had learned and stored a knowledge base made up of the newsroom’s content. In other words, a chatbot built on generative AI can still give wrong answers, even when it is trained only on your journalism.

But we wouldn’t really know what the possibilities were for chatbots until we tested these ideas in a real newsroom setting. That’s why we partnered with Missouri Independent and Queen City Nerve to test chatbot platforms like Chat Thing and Fini.

We found that many of the platforms worked well in early testing, but they sometimes exceeded our news partners’ budgets, became too complicated to customize or delivered unreliable information. Still, we learned some valuable lessons about testing these tools and the process of building chatbots for news.

Questions we asked while researching platforms

When working with Queen City Nerve, publisher Justin LaFrancois said he wanted to create a central “repository of information” and a “one stop shop” where people can ask questions about a specific topic and find trusted answers from Queen City Nerve.

He also wanted to explore uses for AI that did not include generating content for publication, but instead gave their readers a new way to find and engage with their existing content and the issues they cover.

Similarly, Missouri Independent looked for opportunities to expand on their policy coverage and answer additional reader questions, even drawing on reporting that didn’t make it into the final article.

To reach these goals, the newsrooms asked the following questions while researching platforms:

- How can we ensure the bot will not spread misinformation or out-of-context content?

- Can the provider or platform scrape our website, or do we need to provide the content directly?

- What audience insights are available: Can we see what questions people are asking the bot, or how often people use the bot?

- How do the chatbot platforms protect the privacy of users and their data?

- Can we see examples of what the user experience is like for each platform before committing to paying for it?

- Are there options to include the chatbot on social media instead of embedded on our website?

- Can we integrate the chatbot into a Slack channel?

- Are there options to include PDF sources or spreadsheets? (For example, city/county budgets, police documents and annual reports.)

- How affordable is the platform?
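If a platform lets you export conversation logs, a few lines of code can turn them into the kind of audience insight the questions above are after: which questions come up most, and which ones the bot couldn’t answer (possible story ideas or coverage gaps). The sketch below is a minimal Python example that assumes a hypothetical CSV export with `question` and `answer` columns and a hypothetical “I don’t know” reply; we did not confirm that Chat Thing, Fini or any other platform exports data in this shape.

```python
import csv
import io
from collections import Counter

# Hypothetical phrase the bot uses when a question isn't covered by its sources.
NO_ANSWER = "i don't know"


def summarize(log_file, top_n=5):
    """Return (most-asked questions, most-asked unanswered questions) from a CSV log.

    Assumes a hypothetical export with "question" and "answer" columns.
    """
    asked = Counter()
    unanswered = Counter()
    for row in csv.DictReader(log_file):
        # Normalize case and spacing so near-duplicate questions are counted together.
        question = " ".join(row["question"].lower().split())
        asked[question] += 1
        if NO_ANSWER in row["answer"].lower():
            unanswered[question] += 1
    return asked.most_common(top_n), unanswered.most_common(top_n)


# Usage with a small made-up log; in practice, pass open("export.csv", newline="").
sample = io.StringIO(
    "question,answer\n"
    "When is the next council meeting?,The next meeting is on Tuesday.\n"
    "when is the next  council meeting?,The next meeting is on Tuesday.\n"
    "Who owns the mall site?,I don't know. That isn't covered in my sources.\n"
)
top_questions, gaps = summarize(sample)
print(top_questions)
print(gaps)
```

The unanswered list is the part worth a reporter’s attention: Those are questions your community has that your coverage (or at least the content you gave the bot) isn’t answering.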

Comparing pricing, storage and user experience

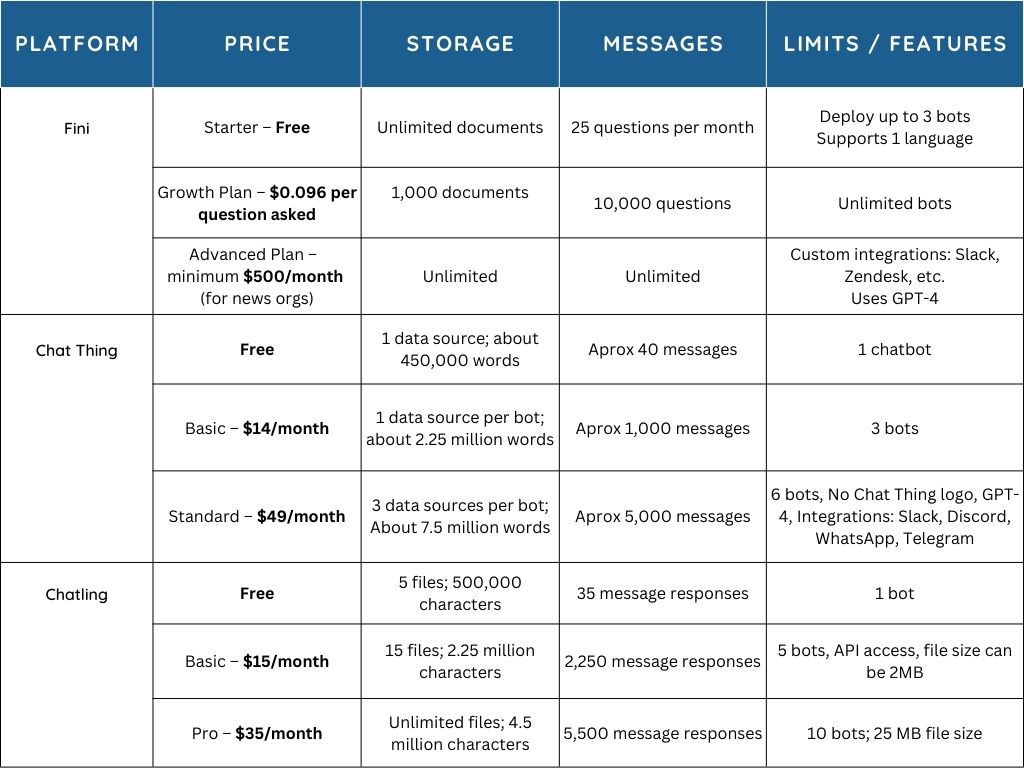

Each platform we researched offered tiered pricing plans; the more expensive tiers let users upload more documents, build more bots or customize and integrate the bots in different ways.

Before deciding which tier to invest in, we tested each platform’s free version. This helped us identify which tools best fit the publications’ needs.

We ultimately scratched ChatbotKit off our list after testing the free version. It was difficult to use; for example, documents were frequently cut off when we tried to upload them to a bot.

Chatling, which Queen City Nerve considered, also seemed to have a lot of potential: It was updating quickly during the experiment, for example by adding the ability to upload PDFs. LaFrancois decided against it, however, because it didn’t offer as much storage as Chat Thing and lacked a Slack integration. (Slack integrations are available in Chat Thing’s Standard Plan and Fini’s Enterprise Plan.)

That left us with three platforms to try: Chat Thing, Fini and Manychat.

Queen City Nerve tested Chat Thing (on the Standard Plan) by creating a simple bot and launching it in their Slack space with members. LaFrancois liked that it integrated easily with Slack, that he could build the entire bot on his own and that he could monitor interactions with the bot via a dashboard. The biggest challenge with Chat Thing was that the bot often struggled to read data in .csv files.

Missouri Independent tested Fini. Unlike Chat Thing, which is more do-it-yourself, Fini builds the bot for you when you move past the free level. We liked that it had controls to ensure the bot stuck to the knowledge base and that it was customizable to go on different platforms besides a website.

In Chat Thing and Fini, the bots can cite the links or sources where they found an answer. That said, when we checked these links, both occasionally attributed an answer to the wrong source. So double-check citations before you let your readers interact with your bot!
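One way to make that double-checking less tedious is a small script. The sketch below is a hypothetical Python example, not a feature of Chat Thing or Fini: It assumes you keep your own copy of every article you gave the bot (URL and text) and flags citations that point outside that set, or whose key phrase doesn’t appear in the cited article. A substring match is a crude test, so treat a “plausible” result as a cue for a human read, not proof.

```python
from urllib.parse import urlparse

# Hypothetical knowledge base: every article URL you gave the bot, mapped to its text.
# These are placeholders, not real stories.
KNOWLEDGE_BASE = {
    "https://example.org/news/story-a": "Placeholder text for story A: the council rejected the proposal.",
    "https://example.org/news/story-b": "Placeholder text for story B: background on the site's history.",
}


def normalize(url):
    """Drop query strings, fragments and trailing slashes so equivalent links match."""
    parts = urlparse(url)
    return f"{parts.scheme}://{parts.netloc}{parts.path}".rstrip("/")


SOURCES = {normalize(url): text for url, text in KNOWLEDGE_BASE.items()}


def check_citation(cited_url, key_phrase):
    """Flag a citation whose URL isn't a known source or whose source lacks the key phrase."""
    text = SOURCES.get(normalize(cited_url))
    if text is None:
        return "not in knowledge base"
    if key_phrase.lower() not in text.lower():
        return "phrase not found in cited source"
    return "plausible"  # still worth a human read


print(check_citation("https://example.org/news/story-b/", "rejected the proposal"))
```

Here the bot credited story B for a detail that only appears in story A, the kind of misattribution we saw in testing.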

Depending on the customizations you request, Fini may require more time or a higher pricing plan. Our goal was to put Missouri Independent’s chatbot on their Instagram, but the newsroom’s IT and social team didn’t have the time or resources to integrate the APIs.

When we tried to create a bot that would live on a website, we found that Fini would require us to pay for at least two months of its $500-per-month Advanced Plan (at least $1,000) to cover creating and implementing the chatbot.

Still, when LaFrancois looked at Fini’s offers, he appreciated that the Advanced Plan allowed him to build unlimited bots and upload as many documents as he wanted for a set price. He considered finding funding specifically for this.

Then there was Manychat, an entirely different product. Unlike the generative AI-based platforms, Manychat lets you create a controlled conversation flow on social media platforms like Instagram and Facebook. We also loved that the free plan was accessible and didn’t require a credit card.

We tried using Manychat on our Innovation Team Instagram, and it was easy to use and a convenient way to answer common questions. If you’re fielding many FAQs on Facebook or Instagram, this may be a helpful automation tool.
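To illustrate the difference between a scripted conversation flow like Manychat’s and a generative bot, here is a minimal Python sketch of a keyword-driven FAQ flow. It is not Manychat’s API or configuration format; the states, keywords and messages are hypothetical. Every reply is written in advance, so the bot can’t invent an answer, but it also can’t handle anything outside the script.

```python
# Hypothetical menu shared by every state: keyword -> next state.
MENU = {"subscribe": "subscribe", "tip": "tip", "events": "events"}

# Hypothetical scripted flow. Every message is written in advance by staff,
# so the bot can only say what the newsroom has approved.
FLOW = {
    "start": {"message": "Hi! What can we help with? Reply SUBSCRIBE, TIP or EVENTS.", "options": MENU},
    "subscribe": {"message": "You can sign up for our newsletter using the link in our bio.", "options": MENU},
    "tip": {"message": "Send story tips to the newsroom email listed in our bio.", "options": MENU},
    "events": {"message": "Our upcoming events are listed on our website.", "options": MENU},
}

FALLBACK = "Sorry, I didn't catch that. Reply SUBSCRIBE, TIP or EVENTS."


def reply(state, user_text):
    """Return (next_state, message) for a user's message in the current state."""
    choice = user_text.strip().lower()
    next_state = FLOW[state]["options"].get(choice)
    if next_state is None:
        # Unrecognized input: repeat the menu rather than improvise an answer.
        return state, FALLBACK
    return next_state, FLOW[next_state]["message"]


state, message = reply("start", " Tip ")
print(message)
```

The trade-off is the point: A scripted flow is predictable and easy to audit, which suits common FAQs, while a generative bot can field open-ended questions at the cost of occasionally getting them wrong.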

Deciding on the bot’s topic and scope

LaFrancois said this was one of the most difficult parts of the project; most of their planning time was spent going back and forth on the bot’s scope.

Queen City Nerve ultimately decided to focus on the topics they cover most. Local police coverage has been a major focus for the past few years, and they figured fact-based reporting would be easier to train a bot on than narrative features or culture reporting.

In the future, LaFrancois said, they would like to use reader surveys to help shape the bot’s topics and scope.

Missouri Independent decided to wait until just before the legislative session to build a bot, creating a more general chatbot where readers could ask civics questions, such as how the local government works or when bills are scheduled.

Testing the bot in a small group before going live

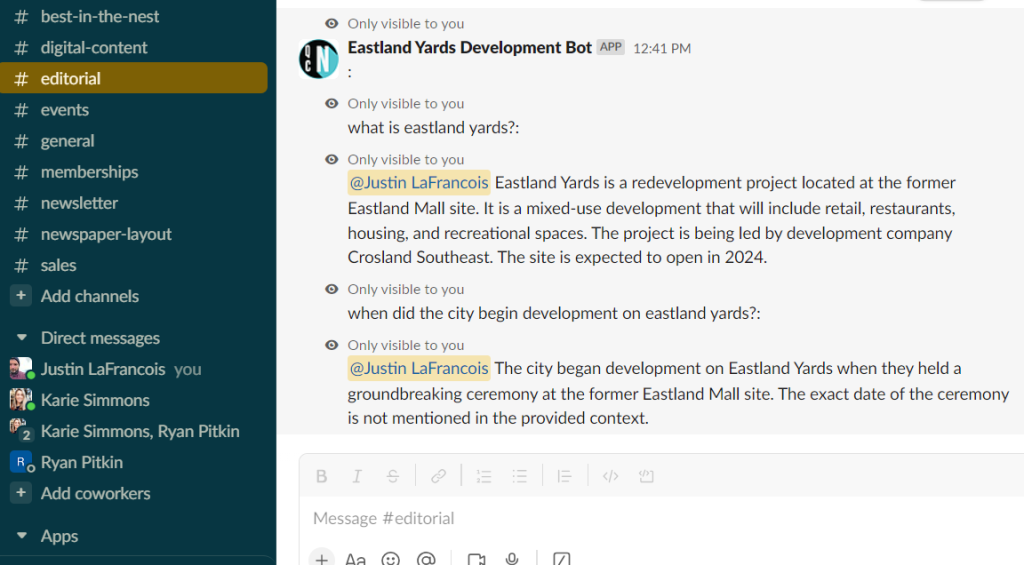

Before building their first full-fledged bot with Chat Thing, Queen City Nerve tested a simple one in their Slack space. They chose to focus on the development of an old mall and pulled links to 50 to 60 stories on the topic from their website. LaFrancois then launched the bot in the Slack space and made clear to members that he wanted them to ask it questions as a test.

This gave us a better understanding of how people might interact with the bot and led to some key takeaways:

- The bot doesn’t always add context to its answers. For example, the chatbot answered a question about a specific policy the community had been considering but never mentioned that the community had rejected the policy until a user asked directly.

- These readers may not be as knowledgeable about the topic as the reporters covering it, but as loyal members who are active in their communities, they asked questions that the original content doesn’t answer.

- The bot appropriately said “I don’t know” when a question was asked that wasn’t in the content shared with it by the newsroom.
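We don’t know exactly how Chat Thing decides when to say “I don’t know,” but the general idea of keeping a bot grounded in a knowledge base can be sketched: Find the newsroom story that best matches the question, and decline to answer if nothing matches well enough. The Python below is a deliberately simplified, hypothetical example that scores stories by shared words; production tools generally use more sophisticated matching plus a language model to write the reply. The stories are placeholders, not real reporting, and the `min_overlap` threshold is an arbitrary assumption.

```python
import re
from typing import Optional, Tuple

# Placeholder stories standing in for a newsroom's archive (not real reporting).
ARTICLES = {
    "story-a": "The council voted on the zoning proposal for the mall site.",
    "story-b": "Demolition of the mall parking deck started after the permit was issued.",
}

STOPWORDS = {"the", "a", "an", "of", "for", "to", "in", "on", "is", "was",
             "what", "who", "did", "when", "how"}


def content_words(text: str) -> set:
    """Lowercased words, ignoring punctuation and common stopwords."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def best_source(question: str, min_overlap: int = 2) -> Optional[Tuple[str, int]]:
    """Return (story_id, overlap) for the best-matching story, or None if none clears the bar."""
    q = content_words(question)
    overlap, story_id = max(
        (len(q & content_words(text)), sid) for sid, text in ARTICLES.items()
    )
    return (story_id, overlap) if overlap >= min_overlap else None


def answer(question: str) -> str:
    match = best_source(question)
    if match is None:
        # Nothing in the knowledge base matches well enough: decline instead of guessing.
        return "I don't know. That isn't covered in the stories I was given."
    story_id, _ = match
    # A real tool would have a language model write the reply from this story;
    # this sketch just returns the matching text.
    return f"From {story_id}: {ARTICLES[story_id]}"


print(answer("When did demolition of the parking deck start?"))
print(answer("Who won the basketball game?"))
```

Raising the threshold makes the bot decline more often; lowering it lets weaker matches through, which is where out-of-context answers like the policy example above can creep in.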

This testing process took about five hours spread across a week, though integrating the bot with Slack took only minutes, according to LaFrancois. The test also revealed challenges with uploading spreadsheets and PDFs into Chat Thing, especially files whose text is displayed as images. LaFrancois suggests starting with links to articles when testing a bot in this tool.

Giving guidance to your readers

Seeing how readers interacted with the bot in the test Slack space also helped shape how Queen City Nerve plans to introduce the bot to their community. LaFrancois plans to create a prompt guide to help readers make the most of the chatbot. He also wants to include information about how the newsroom plans to use AI and to develop a more comprehensive policy that explains which sources they use to train the bot and why.
