Election night mayhem with an AI twist

An automation and AI experiment for newsrooms

The views expressed in this column are those of the author and do not necessarily reflect the views of the Reynolds Journalism Institute or the University of Missouri.

Newsrooms on election night are always chaotic and stressful as journalists watch results trickle in from counties batch by batch. It takes a small army of people to capture those municipal and county results and update the tallies online so voters know in real time who won and lost. For a regional news outlet like Bay City News covering 13 counties, it’s a heavy lift to manually update those local numbers from the time polls close at 8 p.m. until county election offices shut down in the early morning hours. We wondered whether AI and data-scraping tools could efficiently pull information on election night even though each county presents its results in different formats, on different platforms and with different data. The answer? Yes and no.

What worked

Before the election, we used data scraping and AI tools to pull key fields into a spreadsheet: name, occupation and office for the races, and measure title and description for the ballot propositions. From there, BCN intern Ciara Zavala applied code created by ChatGPT and Claude (aka “Claudia” to us) to the spreadsheet data to pull it into a dashboard on our Civic Engagement Hub. The result was a user-friendly place where readers could easily find, through scrolling or search, the races and measures that mattered to them.

What sort of worked

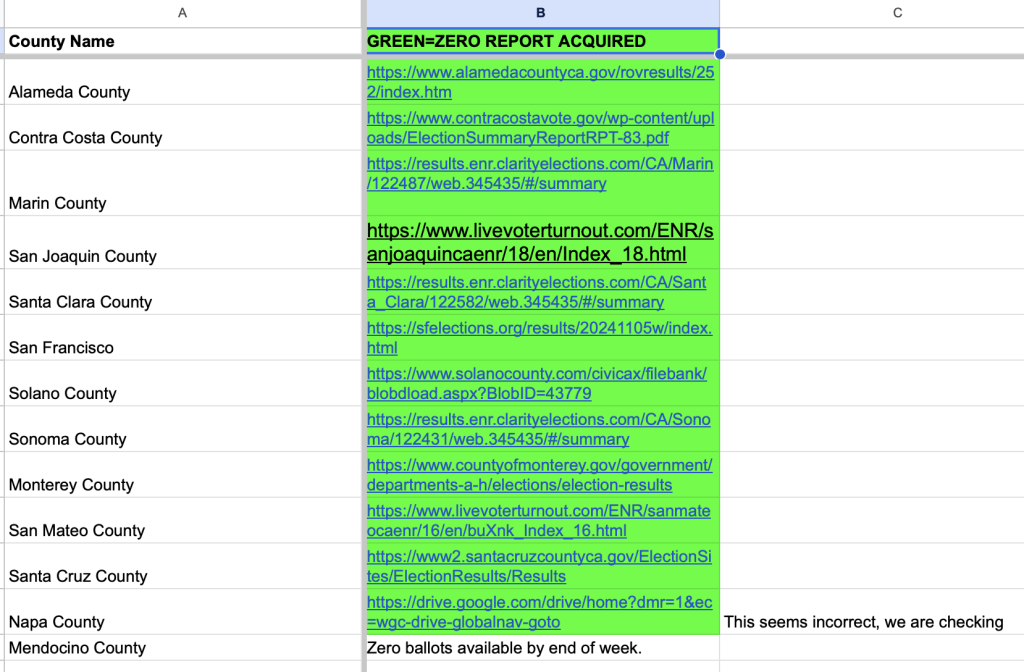

We did a lot of advance planning and research so we could be organized and prepared for election night — but it was not enough. The lack of standardization from 13 registrar offices created significant hurdles for news outlets like ours. (This is a common challenge across the state and country.) Two common software services — Clarity Elections and Live Voter Turnout — are used by some counties, but bespoke, one-off systems are used by others. Registrars are not obligated to present election results in a uniform format, making it a recurring struggle to gather consistent data each election cycle. In addition, some may use the same format each election cycle, but not always; and, of course, the content itself changes with each slate of candidates and measures.

One major hurdle was the difficulty of collecting “zero ballots” or test results from county registrar offices within a short timeframe. Zero ballots serve as the templates county registrars use to present election results when polls close and results get tabulated. These formats were critical for us to see in advance because we had to direct our data scrapers to pull election results from county websites in certain ways with specific code.

During the weeks leading up to the election, we contacted all 13 registrar offices by phone, email and social media to track down these zero ballots. It was a painstaking process that felt like pulling teeth during an already high-pressure time. Some offices released their zero ballots a week before Election Day, while others waited until the day before, or even until 8 p.m. on election night. That left little or no time for testing. In the end, we still did not have zero ballots for two counties, Mendocino and Napa, when polls closed.

Ideally, we should have been able to test our code against all counties to troubleshoot before deadline. We were overly confident that it would work in all 13 cases when results went live.
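A minimal sketch of what such a pre-deadline test could look like, assuming each county's scraper output has already been parsed into rows of contest, candidate and votes. The `ResultRow` type and the `check_zero_report` helper are hypothetical names used for illustration, not part of the code we ran. The idea is that a zero ballot should list every expected contest and show zero votes everywhere, so anything else points to a parsing problem.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResultRow:
    """One parsed line of a county results page (hypothetical structure)."""
    contest: str
    candidate: str
    votes: int


def check_zero_report(rows, expected_contests):
    """Return a list of problems found in rows parsed from a zero ballot."""
    problems = []
    seen = {row.contest for row in rows}
    # Every contest we plan to publish should appear in the template.
    for contest in sorted(set(expected_contests) - seen):
        problems.append(f"missing contest: {contest}")
    # A zero ballot should not contain any votes yet.
    for row in rows:
        if row.votes != 0:
            problems.append(
                f"nonzero votes in zero report: {row.contest} / {row.candidate}"
            )
    return problems


# Placeholder rows, not real election data.
sample = [
    ResultRow("Mayor of Berkeley", "Candidate A", 0),
    ResultRow("Mayor of Berkeley", "Candidate B", 0),
]
print(check_zero_report(sample, ["Mayor of Berkeley", "Measure X"]))
```

Running a check like this for each county as its zero ballot arrives would show which scrapers are ready and which still need work before polls close.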

What didn’t work on deadline

In the first data scrape for election previews, we successfully gathered all the ballot information and populated our AI-coded spreadsheet with it. However, that data scraping team could not guarantee real-time scraping on election night. As a result, we pivoted to a different data scraping team that attempted to retrieve the same content using different code. On election night, we tried to write a program with AI prompts to gather real-time results as reported by each county and then auto-populate the dashboard templates, avoiding the typical manual data entry.

Unfortunately, the new code kept breaking and failed to populate the dashboard correctly. Several problems emerged: the sites updated their information at intervals ranging from every 10 minutes to every hour, and each county site had its own quirks in how it reported results. Some required you to open a separate tab for the race you were interested in, so directing the code to open some tabs but not others created inconsistencies. In addition, some sites reordered candidate names to reflect vote totals, so instead of just the numbers changing in one place, both the names and the numbers changed location.
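One way to guard against rows that move is to key each result by candidate name rather than by position on the page. The sketch below is illustrative only and is not the code we used on election night. It assumes a simple two-column HTML table of candidate and votes, which real county sites may not provide, and the `TableRows` and `votes_by_candidate` names are made up for this example. It uses only Python's standard `html.parser` module.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of each <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._cell = None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # header rows made of <th> cells stay empty
                self.rows.append(self._row)
            self._row = None
            self._cell = None


def votes_by_candidate(html):
    """Map candidate name to vote count, independent of row order."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    return {
        row[0]: int(row[1].replace(",", ""))
        for row in parser.rows
        if len(row) == 2  # assumption: name in column 1, votes in column 2
    }


# Placeholder snapshots: the second one lists the same candidates in a new order.
early = ("<table><tr><th>Candidate</th><th>Votes</th></tr>"
         "<tr><td>Candidate A</td><td>1,200</td></tr>"
         "<tr><td>Candidate B</td><td>950</td></tr></table>")
later = ("<table><tr><th>Candidate</th><th>Votes</th></tr>"
         "<tr><td>Candidate B</td><td>2,400</td></tr>"
         "<tr><td>Candidate A</td><td>2,100</td></tr></table>")
print(votes_by_candidate(early))
print(votes_by_candidate(later))
```

Because the lookup is by name, a dashboard cell for "Candidate A" keeps receiving that candidate's total even after the county page reshuffles its rows.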

Although we prepared as best we could, using a collection of website scraping tools, design structures refined by prompting Claude and ChatGPT, and general HTML and website analysis tools, we could not process the changing layouts of each individual county website. With more time, this could likely have been perfected, but not within the hour we had given ourselves to start reporting results.

Below is the initial prompt used as the basis for the code editor to do continuous scraping on election night.

Hi Claude! Can you please help me with a project to consolidate election results from 13 Bay Area counties for a local news agency? The input will be a collection of links to the counties’ official election results sites. The output should be a Google Spreadsheet with a tab for each county. Since the data isn’t formatted consistently across all links, we’ll need to create a process for each one. Fortunately, some of them overlap. We can break the project into four sections. If you have a better suggestion for structuring the workflow, please share it.

- Retrieving data from the sites

- Parsing and formatting the data for proper output

- Temporarily storing the output

- Writing the data to the Google Spreadsheet

After this step and a few clarifying Claude prompts, the programmer attempted to work with each county and scrape the sites every 10 minutes. But each time we applied the code for one county to another county, it broke. It may have been that results were changing too quickly and we could not keep up. It may have been that the websites were too different for a single piece of code to work on multiple templates.
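The four stages in the prompt (retrieve, parse, store temporarily, write) can be sketched with one parser per results format instead of a single piece of code for every county. This is a hedged illustration under stated assumptions, not the program we ran: the platform names `json_feed` and `pipe_text`, the data layouts, the county labels and the example URLs are all invented, and the fetch and spreadsheet-writing steps are passed in as plain functions so the sketch runs without network access or a Google account.

```python
import json
from typing import Callable, Dict, List

Row = Dict[str, object]
PARSERS: Dict[str, Callable[[str], List[Row]]] = {}


def parser_for(platform):
    """Register a parsing function for one results format."""
    def register(func):
        PARSERS[platform] = func
        return func
    return register


@parser_for("json_feed")  # hypothetical format, not a real vendor feed
def parse_json_feed(raw):
    return [
        {"contest": c["name"], "candidate": o["name"], "votes": int(o["votes"])}
        for c in json.loads(raw)["contests"]
        for o in c["options"]
    ]


@parser_for("pipe_text")  # hypothetical "contest | candidate | votes" lines
def parse_pipe_text(raw):
    rows = []
    for line in raw.splitlines():
        if not line.strip():
            continue
        contest, candidate, votes = (part.strip() for part in line.split("|"))
        rows.append({"contest": contest, "candidate": candidate, "votes": int(votes)})
    return rows


def run_once(counties, fetch, write_tab):
    """One polling pass: retrieve, parse, stage in memory, then write each tab."""
    staged, errors = {}, {}
    for county, (platform, url) in counties.items():
        try:
            staged[county] = PARSERS[platform](fetch(url))
        except Exception as exc:  # one broken county should not stop the rest
            errors[county] = repr(exc)
    for county, rows in staged.items():
        write_tab(county, rows)
    return staged, errors


# Stand-ins for the network and the spreadsheet (placeholder data only).
pages = {
    "https://example.invalid/a": '{"contests": [{"name": "Mayor", '
                                 '"options": [{"name": "Candidate A", "votes": "10"}]}]}',
    "https://example.invalid/b": "Mayor | Candidate B | 7",
    "https://example.invalid/c": "not a results page",
}
counties = {
    "County A": ("json_feed", "https://example.invalid/a"),
    "County B": ("pipe_text", "https://example.invalid/b"),
    "County C": ("pipe_text", "https://example.invalid/c"),
}
tabs = {}
staged, errors = run_once(counties, pages.__getitem__, tabs.__setitem__)
print(sorted(tabs), sorted(errors))
```

In this arrangement a county whose page cannot be parsed is recorded in `errors` while the others still update, and counties that share a platform can share a parser. A scheduler could call `run_once` every 10 minutes, and in a real deployment `write_tab` would wrap whichever spreadsheet client the newsroom uses.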

What we did to pivot

By 8:05 p.m., counties were starting to release the first batches of counted mail-in ballot results, and readers were looking for them. We did not have them!

We could not populate any of the pages on our Civic Engagement Hub with results. Fearing that we would lose readers, we decided to pivot and put links to the live county sites behind our public-facing design. That meant that a reader could click on their county (for example, Alameda), and scroll to find their race of interest (such as Mayor of Berkeley) via the official government results page. What they couldn’t do was see our user-friendly dashboard or use our search bar to find what they were looking for.

Chances are, readers never knew anything was wrong. In fact, several told us later, “Thank you for the election results you presented. It was an easy way to find out what was happening on election night.” But we knew it was not the seamless presentation we were hoping for — and we kept trying to fix the code until almost 2 a.m.

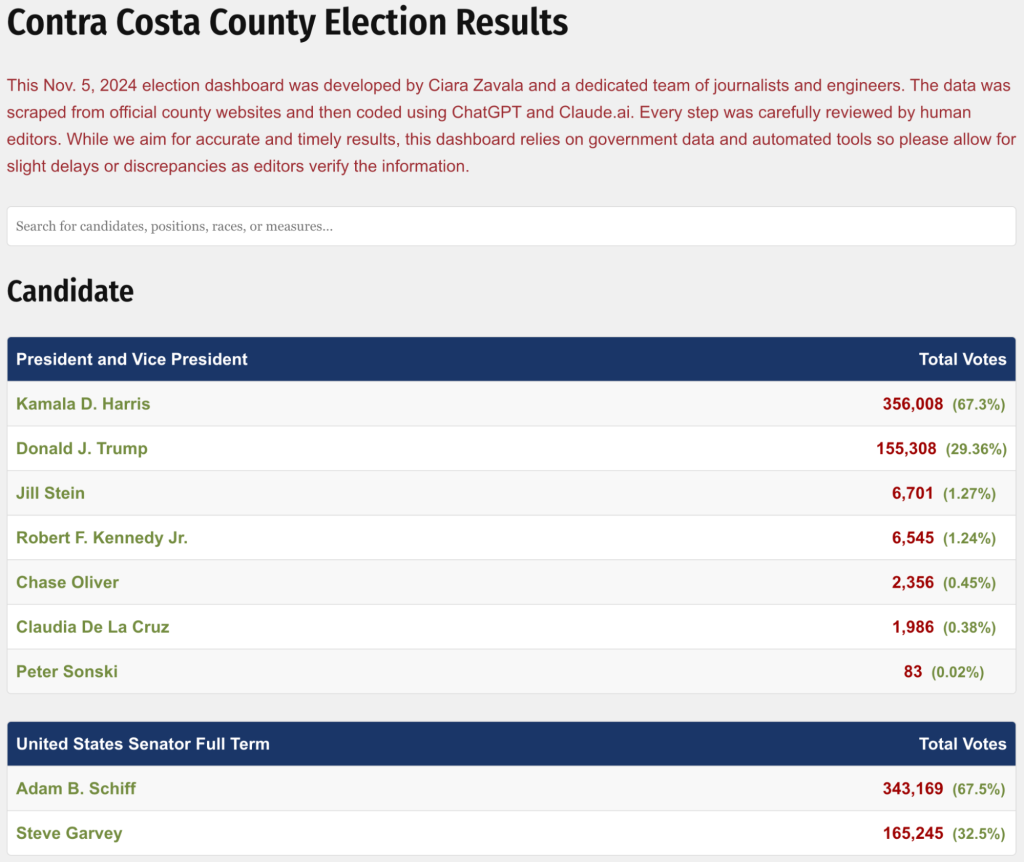

Although we had to abandon the live coding experiment that night, we did go back and apply the earlier method of scraping static websites to display the final, certified results on our dashboard on Dec. 5. Here’s what the top of the section looked like for Contra Costa County as an example, starting with the national races and flowing down through state, county and municipal contests.

Summary

Ultimately, even with the election night challenges, the project achieved our primary goals: to centralize information from the greater Bay Area on an easy-to-use dashboard and to use AI tools to make the process more efficient. The results were not perfect, but it is noteworthy that just a couple of weeks after the election, new and better tools became available that might have solved our election night hiccups. More sophisticated scraping tools can now identify specific types of information regardless of where it is positioned in a PDF, CSV or HTML document, and can be instructed by AI to populate our spreadsheets. We know the AI tools are going to continue to evolve rapidly, and our experiment gave us a workflow to replicate for future elections.

The results of our teamwork, done with support from the Reynolds Journalism Institute, can be seen in two places: our “Civic Engagement Hub” and our “Experiments in AI” sandbox.

Next up (Article #3): Recommendation for the future.

Cite this article

Rowlands, Katherine Ann; and Zavala, Ciara (2025, Jan. 22). Election night mayhem with an AI twist. Reynolds Journalism Institute. Retrieved from: https://rjionline.org/news/election-night-mayhem-with-an-ai-twist/
