What crowdsourcing 10,000 pages of government records has taught us

A little over a year ago, we launched our new MuckRock Assignments tool to help crowdsource analysis of large document sets. Since then, more than 1,000 volunteers have helped newsrooms and non-profits (including MuckRock) analyze more than 10,000 pages of documents.

This work was supported by a Reynolds Journalism Institutional Fellowship, which has helped us think through our product features as well as how we can best empower newsrooms to connect with their communities, and by the Knight Foundation and Democracy Fund.

Here are some of the lessons we’ve learned going through the first 10,000 submissions, and where we want to take our crowdsourcing tools next.

Start simple and keep iterating

The very first version of MuckRock Assignments launched in January 2012, and at that point it was a very manual user participation tool: We wrote up a few things we wanted help with, and users emailed in to “complete” a given assignment.

It was very low tech but provided an easy way to let users participate in our work and earn free requests and access on the site. Then came the ambitious Whitewash iOS prototype, which let users swipe on sample data to help categorize requests. While we never published the iOS app for general use, the backend of that app powered an internal crowdsourcing system that was critical to helping us scale up our work, and would set the stage for our Assignments tool.

Those same lessons — of building, testing, and iterating — apply to successfully running crowdsourcing campaigns on Assignments.

Make it easy to both contribute…

One thing we’ve seen over the years is how dependent project success is on turning reader enthusiasm into action in a simple, understandable way. This means sharing a lot of context around any asks, as well as making sure readers understand what they’re supposed to be doing.

There are a few basic tips for setting up questions in a crowdsourcing campaign: the fewer the questions, the higher the response rate; the fewer the mandatory fields, the less chance a user will be put in a spot where they can’t move forward.

But there were some more surprising findings for us. For example, having a landing page or article that explains the context greatly improved response rates compared with just sending people directly to the page where they do the work.

Here’s an example of one of those reader response articles in action.

We’ve also continued to make tweaks to the platform, such as embedding Assignments right within an article with an iframe, so a reader doesn’t even have to click away to participate.

…and to manage contributions

But as important as it is to make it easy for people to help out with crowdsourced projects, it’s equally important to make it easy to experiment and launch new Assignments so that you can try new ideas or respond quickly to topics of broad interest.

We made a drag-and-drop Assignment creator so that it’s easy to add and tweak questions.

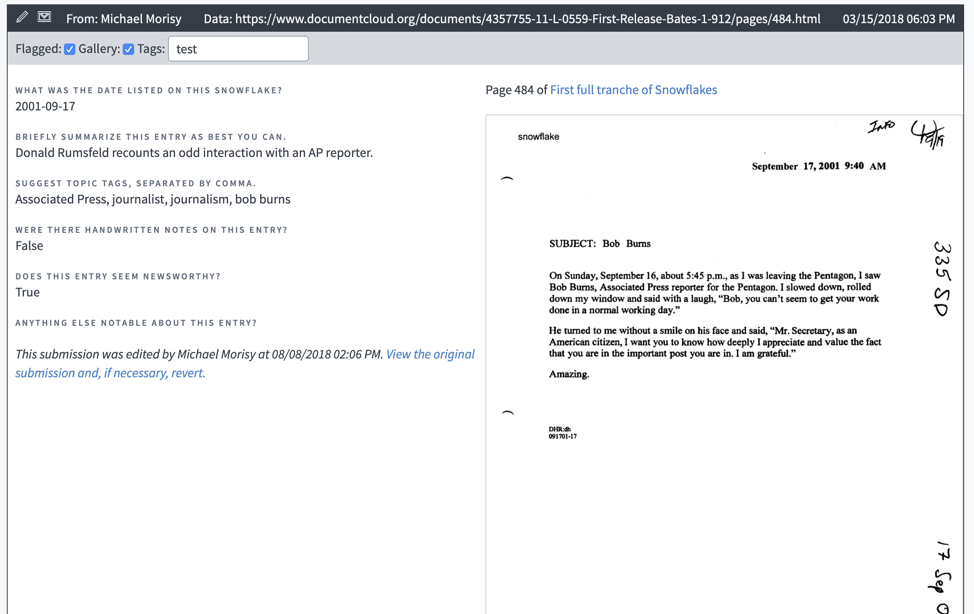

We’ve also added features so that you can review responses in a variety of ways: getting an email every time a new entry is submitted, downloading data as a CSV for statistical analysis, or exploring submissions with a real-time search interface that lets you flag entries for further review.

We also added the ability to edit submissions and follow up with contributors with just a click, along with other tools that make it easy to manage an influx of data when a successful crowdsourcing campaign finds its audience.
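To give a rough sense of what the CSV download makes possible, here is a minimal Python sketch that tallies answers and pulls out flagged entries. The column names (`submission_id`, `contributor`, `document_type`, `flagged`) and the sample rows are assumptions made up for illustration, not the actual Assignments export format, so adjust them to match whatever your own export contains.

```python
import csv
import io
from collections import Counter

# Hypothetical export: these column names and rows are assumptions for
# illustration only, not the real MuckRock Assignments CSV schema.
SAMPLE_EXPORT = """submission_id,contributor,document_type,flagged
1,alice,invoice,no
2,bob,email,yes
3,alice,invoice,no
4,carol,memo,no
5,bob,email,no
"""


def tally_column(csv_text, column):
    """Count how often each value appears in one column of the export."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column].strip() for row in reader)


def flagged_ids(csv_text):
    """Return the IDs of submissions marked 'yes' in the flagged column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row["submission_id"]
        for row in reader
        if row["flagged"].strip().lower() == "yes"
    ]


if __name__ == "__main__":
    # Print each answer with its count, most common first.
    for value, count in tally_column(SAMPLE_EXPORT, "document_type").most_common():
        print(f"{value}: {count}")
    print("Flagged for review:", flagged_ids(SAMPLE_EXPORT))
```

With a real export you would read the downloaded file instead of the inline sample, but the same counting approach is often enough for a first look at which answers dominate and which submissions need a second pair of eyes.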

Where we go from here

We’ve been really excited by the uptake on Assignments, both from contributors and newsrooms participating in our beta program. So far, users have created over 100 different crowdsourced campaigns (including campaigns that don’t involve documents but ask for user-submitted tips or ideas).

There are three key areas we want to focus on in the next year:

- Making it easier for newsrooms and non-profits to organize and manage their relationships with readers, and to understand the benefit of working with their audience to tackle large stories in a collaborative, transparent way.

- Using machine learning to increase the impact that these contributions have, by making it easy for local news organizations to train and deploy their own models based on data they already have tagged.

- Doing a better job highlighting and building a community around the various open Assignments, and showcasing how anyone can help build a more impactful, sustainable culture of journalism and transparency in the communities and topics they care about.

The good news is that, even in closed beta, our crowdsourcing tools have taken off in ways that we couldn’t have predicted, and our recent survey found that over 70% of MuckRock users are interested in finding new ways to contribute to projects they care about.

If you’re interested in building stronger relationships with your readers — or just finding better ways to sift through endless piles of PDFs — take a look at some of our open Assignments and then get in touch. We’d love to work with you.