Photo: substanz | Canva

Resources to support newsrooms’ responsible use of and reporting on algorithms

An annotated directory of how-to guides, exemplary journalism and policies — plus a cool online game

There’s so much information about algorithms and their social impacts that it’s sometimes hard to know where to begin or how to focus. Of course, Algorithmic Literacy for Journalists (my RJI Fellowship project) is intended to help reporters and newsrooms navigate that torrent of information. But beyond the specific resources developed for this project, we also recommend the following sources for those who want to dive deeper into the space where algorithms and journalism intersect.

This resource guide is organized into three topics (how-to guides, exemplary news reports, and policy and regulation), plus an online game, with annotations highlighting the most important and practical aspects of each resource. This guide is not exhaustive; if you know of additional resources that ought to be listed here, please contact us.

How-to guides

A Checklist of 18 Pitfalls in AI Journalism, by Sayash Kapoor and Arvind Narayanan, September 30, 2022. Based on reports published by outlets like the New York Times and CNN, this brief guide identifies recurrent problems—such as attributing agency to AI or treating company spokespersons as neutral parties—that mislead the public. Recognizing these “pitfalls” can help journalists avoid them in their own reporting. (Kapoor and Narayanan’s checklist was the original inspiration for Algorithmic Literacy for Journalists.)

AI and Responsible Journalism Toolkit, Tomasz Hollanek, et al., DesirableAI, 2023. A comprehensive, evolving resource organized into four main areas: education, ethics, voices, and structures. Aiming to halt the spread of problematic AI narratives while fostering inclusivity and diversity in discussions about AI, the toolkit includes specific guidance for journalists and positive examples of reporting on AI. Highly recommended.

Reporting on Artificial Intelligence: A Handbook for Journalism Educators, Maarit Jaakkola, ed., UNESCO International Programme for the Development of Communication (IPDC) and World Journalism Education Council, 2023. Artificial intelligence (AI) means different things to different people. Across seven modules, this guide covers everything from defining AI to cultural myths and patterns of storytelling in reporting about it.

Your Newsroom Needs an AI Ethics Policy. Start Here, Kelly McBride, Poynter, March 25, 2024. How to ground newsroom implementation of AI in the core journalistic principles of accuracy, transparency, and audience trust. Includes specific guidelines for privacy and security, customizing GPTs, and promoting audience AI literacy.

Better Images of AI: A Guide for Users and Creators, Kanta Dihal and Tania Duarte, Leverhulme Centre for the Future of Intelligence and We and AI, 2023. Designed for artists, educators, and activists, this guide will also be useful to reporters concerned with how biased visual representations of artificial intelligence shape false public perceptions of AI. Based on a year-long study of better ways to create images of AI, involving more than 100 experts from the tech sector, media, education, research, policy and the arts, the guide shows how to source and create images that communicate accurately and compellingly about AI.

Tips for Posting Art, Don’t Delete Art, updated December 19, 2023. Although mostly focused on challenges faced by artists who post their work on social media platforms, this guide includes tips that also apply to journalists and newsrooms, especially those using social media platforms such as Instagram and Facebook.

Introducing 5 AI Solutions for Local News, Nicole Meir, Associated Press, October 10, 2023. From automated write-ups of public safety incidents to transcripts of city council meetings that include keyword identification and reporter alerts, this report features AI applications of direct relevance to local news organizations.

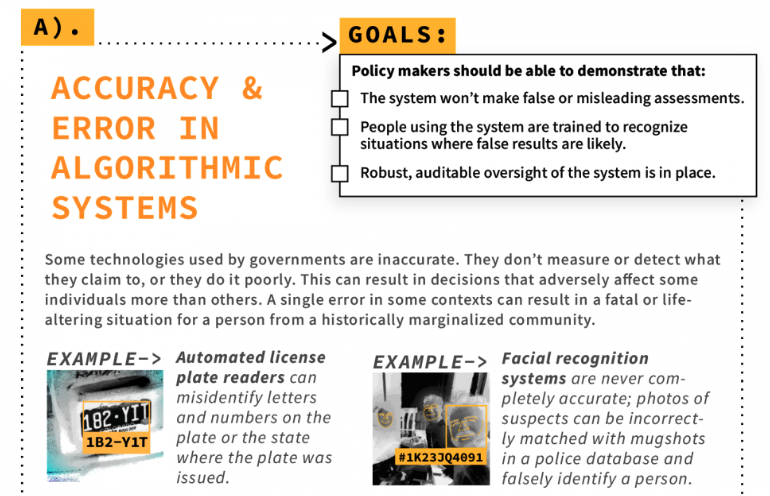

Algorithmic Equity Toolkit, ACLU Washington, May 2020. Primarily designed for grassroots activists, the toolkit helps users identify and understand AI technologies used by government agencies (including law enforcement), ask critical questions about those technologies’ social impacts, and establish oversight mechanisms. Its clear, precise set of questions for policymakers is ready for use by journalists.

Learn while playing

Try your hand at AlgorithmWatch’s online game, Can You Break the Algorithm? (October 11, 2023). You’ll play the role of a journalist tasked by a tough editor with determining whether the algorithm used by TikTube, a fictional social media company, makes teenagers sad.

In about ten minutes, your character will pursue answers from regulators, scholars, and industry in an attempt to file a strong story on deadline. This entertaining game offers useful lessons about the challenges of algorithmic accountability reporting.

Exemplary reporting

Machine Bias: Investigating Algorithmic Injustice, ProPublica series, September 1, 2015, through December 13, 2019. Reporting by Julia Angwin and colleagues, including the article Machine Bias, on racially biased software used in criminal sentencing. Many experts cite this reporting as the beginning of what is now known as algorithmic accountability reporting.

Google Elevates Anti-LGBTQ News Sites, Matt Tracy, Gay City News, October 9, 2019. A good example of a do-it-yourself algorithm audit, exposing how Google promotes what Tracy described as “some of the web’s fringiest alt-right, anti-gay news websites” in response to searches for LGBTQ news.

How Advertisers Defund Crisis Journalism, Ben Parker, The New Humanitarian, January 27, 2021. How advertising blocklists are “undermining corporate social responsibility and effectively punishing publishers for reporting on international crises.”

Google Has a Secret Blocklist that Hides YouTube Hate Videos from Advertisers—But It’s Full of Holes, Leon Yin and Aaron Sankin, The Markup, April 8, 2021. Google’s advertising blocklist missed dozens of hate terms used by White supremacists and White nationalists. This is the first of a two-part series; Yin and Sankin’s follow-up report is here.

Removing Bias from Devices and Diagnostics Can Save Lives, Cassandra Willyard, Scientific American, November 2024. How medical researchers and clinicians are redesigning algorithms to address deep-rooted racial biases in medical diagnoses. The Association of Health Care Journalists published an interview with Willyard about her investigation.

How We Investigated Shadowbanning on Instagram, Tomas Apodaca and Natasha Uzcátegui-Liggett, The Markup, February 25, 2024. The Markup found that since the Israel-Hamas war began, Instagram has “heavily demoted” non-graphic war images, deleted captions and hidden comments without notification, suppressed hashtags, and limited users’ ability to appeal moderation decisions.

In addition to these specific reports, two sources provide ongoing coverage that matters:

Generative AI in the Newsroom (GAIN) is a collaborative effort to explore responsible use of generative AI in news production. GAIN is edited by Nick Diakopoulos and Jeremy Gilbert and was launched on Medium in January 2023 by the Computational Journalism Lab at Northwestern University with support from the Knight Foundation.

The Solutions Journalism Network’s Story Tracker includes a running collection of solutions reporting focused on Algorithms, Artificial Intelligence and Machine Learning.

Policy, regulation and standards

Paris Charter on AI and Journalism, November 10, 2023. Ten principles to “uphold the right to information, champion independent journalism, and commit to trustworthy news and media outlets in the era of AI,” issued by a 32-member commission representing journalists from twenty countries, coordinated by Reporters Without Borders, and chaired by journalist Maria Ressa, co-recipient of the 2021 Nobel Peace Prize. The Charter’s principles include a call for journalists, journalism outlets, and support groups to “play an active role in the governance of AI systems.”

Remarkably, despite its significance, the Charter received almost no news coverage beyond reports by signatory organizations. Among English-language outlets, only the Voice of America published an original report; no other national US news organization appears to have even mentioned the Paris Charter.

2023 Landscape: Confronting Tech Power, AI Now Institute, 2023. Overtly partisan in its commitment to “confront tech power,” the AI Now Institute’s report makes clear that “nothing about artificial intelligence is inevitable.” The report argues that the development of AI technology concentrates economic and political power in the hands of the tech industry, with serious social, political, and economic consequences. One of the report’s four strategic priorities for action is algorithmic accountability, which includes roles for investigative journalists.

Generative AI in Journalism: The Evolution of Newswork and Ethics in a Generative Information Ecosystem, Nicholas Diakopoulos, et al., AI, Media and Democracy Lab and Associated Press, April 2024. Based on a survey of nearly 300 news editors, executives, and reporters from North America and around the world, the authors report on how news organizations are addressing the practical and ethical issues involved in using generative AI responsibly.

AI Risk Repository: A comprehensive database of risks from AI systems, MIT, August 2024. Although not specifically focused on journalism, MIT’s living database of more than 700 documented AI risks, categorized by their causes and domains, is a vital resource for journalists covering the latest developments in AI technology. The site provides an accessible overview of what its developers call the “AI risk landscape,” and they regularly update it to include the latest research. Follow this link for a two-minute introduction to the database.

Cite this article

Roth, Andy Lee; and anderson, avram (2025, Jan. 7). Resources to support newsrooms’ responsible use of and reporting on algorithms. Retrieved from: https://rjionline.org/news/resources-to-support-newsrooms-responsible-use-of-and-reporting-on-algorithms/