Digital collage by Aura (Auralee) Walmer, images from Public Domain.

Customization is key to a compelling sonification

Learn how to create your own story-driven sonification

Sonification workflows: There’s more to the story

Have you tried data sonification yet? Luckily, with many sonification tools emerging in recent years, it’s becoming much easier to transform data into sound. But creating a compelling data sonification project requires more than simply grabbing the output from one of these tools and calling it a done deal. Customization and intentional production are key to capturing the attention of listeners. This is where the process of audio editing plays a truly vital role.

This month, the focus is sonification customization. By integrating context, narrative storytelling, sonic vocabulary (training the listener), and/or aesthetic elements, a sonification designer can craft a listening experience that is genuinely memorable.

For journalism in particular, telling the story behind the data is necessary — and supplementing sonification with context is what makes this possible.

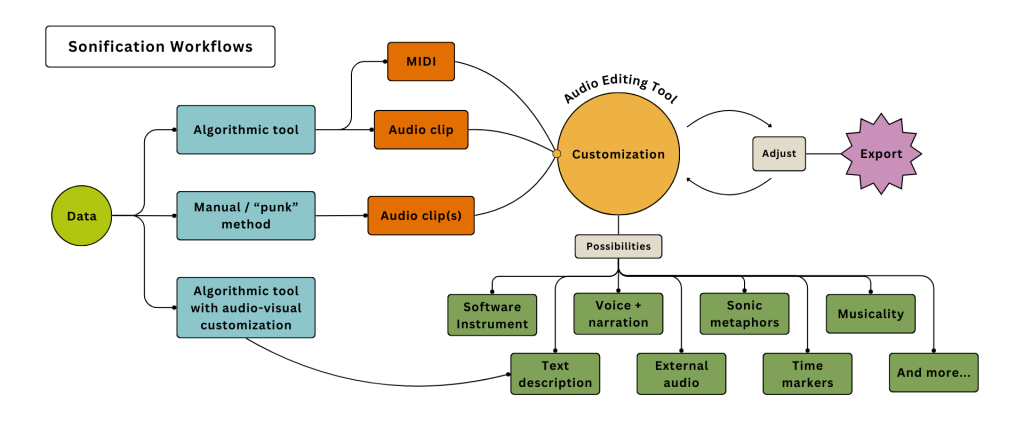

Here is a general workflow for sonification:

- Data — Selecting and preparing data

- Tool/method — Using a sonification tool or method to convert data to sound

- Audio artifact — Generating an audio clip or MIDI file

- Customization — Implementing design and context to craft a story, and getting feedback for adjustments

- Export — Sharing the sonification with an audience

- Optional: visual accompaniment or text description

Some options for customizing your sonification:

- Adjust your musical instrument. If you’re working with a MIDI file, try out different software instruments in your audio editor. Pick an instrument that fits the overall feeling and goal of your sonification project.

- Record your voice. With narrative elements, you can introduce the listener to the data, why the topic matters, what sounds to expect, and what certain sounds indicate.

- Bring in external audio. You can include recordings of people you’ve interviewed, news story segments, snippets of music, or other clips to build out the composition.

- Use sonic metaphors. The use of an auditory icon can be helpful to indicate an event, such as a historical moment, or a notable change in the data.

- Consider a time marker. If you’re working with time-series data, consider what sound is communicating the rate of time. This could be a voice subtly mentioning a date, or a tempo track marking time segments.

- Add a text description. Sometimes it’s helpful to provide a written explanation to accompany your sonification to provide context. Not only can screen readers access this text, but listeners can reference it as a contextual listening guide as well.

Training the listener

Jordan Wirfs-Brock, an avid sonification developer, researcher, and professor at Whitman College, is a proponent of training the listener in sonification. She emphasizes the value of introducing components of a sonification prior to presenting the piece as a whole. Check out slide 19 from this presentation by Jordan, all about “learning to listen.” Some methods for training the listener include: narrative explanation, breaking down components, and speech-based annotations.

Have you ever listened to the podcast Song Exploder? In this podcast, the host Hrishikesh Hirway interviews musicians to reveal the origin story behind a song, introducing the isolated stems in a chronological story. This gives the listener a chance to become familiar with the components of the song before hearing the macro composition. By the end of the episode, the entire song is played, and all of those parts come together in illuminating harmony. Sonification storytellers can take a similar approach with their audiences, presenting the isolated aspects of the audio and attaching meaning to each of them. The listener is thus armed with the “vocabulary” needed to absorb real meaning from the sonification.

Sonification demo: The sound of New York City evictions

This is a step-by-step workflow that you can try yourself!

The idea of using “code” to generate a sonification may sound daunting to some folks. But there’s no need to feel intimidated. This month, I created a sonification using Python and audio editing software, adapting Matt Russo’s approach in his Python sonification tutorial to a different data set. If you’d like to try this approach, you can follow the steps below. I’ve provided all the materials needed for replicating this project in this GitHub repository.

Step 1: Find the data that will drive the sonification

For inspiration, I often turn to Data Is Plural, a “weekly newsletter of useful/curious datasets,” edited by Jeremy Singer-Vine. While exploring some recent editions, I came across New York City eviction data, which caught my interest. Taking a closer look at the data from NYC OpenData, I noticed that it took on an interesting shape: evictions reached particularly high levels from 2017 through 2019, dropped drastically during the COVID-19 pandemic, and have increased steadily since then. Given how distinct this pattern is, I thought it would be ideal for a time-based sonification.
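Before a dataset like this can drive a sonification, individual records usually need to be rolled up into a time series. Here is a minimal sketch of that preparation step using only Python's standard library. The column name `Executed Date`, the date format, and the sample rows are assumptions made for illustration; check them against the actual file you download, and note that this is not the preparation script from the repository.

```python
import csv
import io
from collections import Counter
from datetime import datetime

# Hypothetical sample rows for illustration; a real export has many more columns.
SAMPLE_CSV = """Executed Date,Borough
01/15/2019,BRONX
01/28/2019,BROOKLYN
02/03/2019,QUEENS
04/20/2021,BRONX
"""


def monthly_counts(csv_file, date_column="Executed Date", date_format="%m/%d/%Y"):
    """Count rows per (year, month) and return them in chronological order."""
    counts = Counter()
    for row in csv.DictReader(csv_file):
        executed = datetime.strptime(row[date_column].strip(), date_format)
        counts[(executed.year, executed.month)] += 1
    return sorted(counts.items())


counts = monthly_counts(io.StringIO(SAMPLE_CSV))
for (year, month), n in counts:
    print(f"{year}-{month:02d}: {n}")
```

To run this on a downloaded file, pass an open file handle (for example, `open("nyc-evictions.csv", newline="")`) in place of the in-memory sample.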

Step 2: Convert CSV data to a MIDI file using Python

Dr. Matt Russo, an astrophysicist, musician, and educator, uses sonification to “convert astronomical data to music and sound,” making astronomy more accessible for the visually impaired. His TED Talk, “What does the universe sound like? A musical tour,” is worth checking out.

Russo created a sonification tutorial (Sonification with Python – How to Turn Data Into Music w Matt Russo) that I’ve been wanting to try for quite some time. I followed the steps in this tutorial, adapting the process to the NYC Evictions data that I prepared.

The process takes data and converts it to a MIDI file, a format that audio editing software can read. The MIDI file includes adjusted parameters for pitch and velocity that will transfer to the audio editing stage. My version of the Python script (‘nyc-evictions-data2midi.py’) and the materials needed to run it (‘nyc-evictions.csv’, ‘functions.py’) are available in the GitHub repository. (Note: the audiolazy library can be difficult to install, so make sure you add ‘functions.py’, which contains the function needed, to your working folder!)
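To give a feel for what “adjusted parameters for pitch and velocity” can mean, here is a minimal sketch of one common mapping: each data value is rescaled onto a musical scale (pitch) and onto MIDI's loudness range (velocity). This is an illustration, not the script from the repository. The sample values, the pentatonic scale, the velocity floor of 35, and the one-beat-per-month pacing are all assumptions.

```python
def map_value(value, in_min, in_max, out_min, out_max):
    """Linearly rescale value from [in_min, in_max] to [out_min, out_max]."""
    if in_max == in_min:
        return out_min
    fraction = (value - in_min) / (in_max - in_min)
    return out_min + fraction * (out_max - out_min)


# Hypothetical monthly counts for illustration, not real eviction figures.
monthly_evictions = [1800, 2000, 1600, 0, 50, 400, 1000]

# C major pentatonic over two octaves, as MIDI note numbers (60 = middle C).
SCALE = [48, 50, 52, 55, 57, 60, 62, 64, 67, 69, 72]

BEATS_PER_MONTH = 1  # assumed pacing: one beat per data point


def to_notes(values):
    """Return (start_beat, pitch, velocity) for each data value."""
    lo, hi = min(values), max(values)
    notes = []
    for i, value in enumerate(values):
        # Larger values pick higher notes in the scale.
        index = round(map_value(value, lo, hi, 0, len(SCALE) - 1))
        # Velocity is MIDI loudness (1-127); the floor keeps low values audible.
        velocity = round(map_value(value, lo, hi, 35, 127))
        notes.append((i * BEATS_PER_MONTH, SCALE[index], velocity))
    return notes


notes = to_notes(monthly_evictions)
for start_beat, pitch, velocity in notes:
    print(start_beat, pitch, velocity)
```

Snapping to a scale rather than using every MIDI pitch is a design choice: the result tends to sound more musical, at the cost of some resolution in the data.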

If you’re not comfortable with using Python in an integrated development environment (IDE), you can also run this code in your web browser to generate the same results! Check out the folder called “Jupyter Notebook Method” in the GitHub repository. Download the contents of this folder, and upload the files to a Jupyter Notebook workspace.

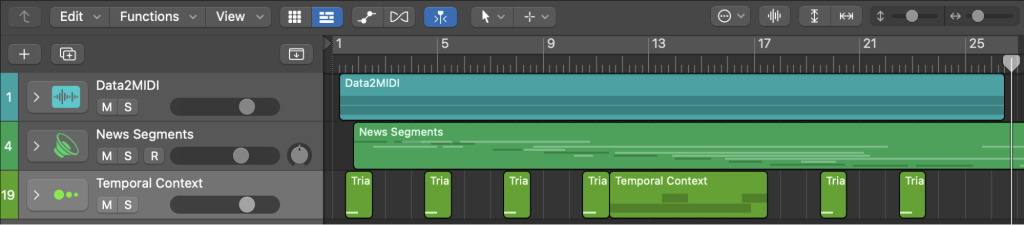

Step 3: Customize the sonification in an audio editing tool

At this step, we’ve generated a MIDI file (‘nyc-evictions.mid’) which can now be adjusted in an audio editing tool. MIDI is highly customizable because you can apply any software instrument to it, adjust the tempo, and edit the notes. My audio editing tool of choice is Logic Pro X, but you can use any software that you’re comfortable with. Some alternatives include GarageBand (for Mac users), Audacity (free downloadable software), and Soundation (free web-based audio editor).
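One way to see why MIDI is so editable is to look at what the file actually stores: note numbers, velocities, timings, and a tempo, rather than recorded audio. The sketch below builds a tiny single-track MIDI file by hand using only the standard library. It is for illustration only and is not how the repository's script produces its file; the helper names `vlq` and `build_midi` are hypothetical, and the two example notes are made up.

```python
import struct


def vlq(n):
    """Encode a non-negative integer as a MIDI variable-length quantity."""
    out = [n & 0x7F]
    n >>= 7
    while n:
        out.append((n & 0x7F) | 0x80)
        n >>= 7
    return bytes(reversed(out))


def build_midi(notes, bpm=120, ticks_per_beat=480):
    """Build a format-0 MIDI file from (start_beat, pitch, velocity, duration_beats)."""
    events = []  # (absolute_tick, sort_order, message_bytes)
    for start, pitch, velocity, duration in notes:
        on_tick = round(start * ticks_per_beat)
        off_tick = round((start + duration) * ticks_per_beat)
        events.append((on_tick, 1, bytes([0x90, pitch, velocity])))  # note on
        events.append((off_tick, 0, bytes([0x80, pitch, 0])))        # note off
    # At the same tick, note-offs are written before note-ons.
    events.sort(key=lambda event: (event[0], event[1]))

    # Tempo meta event: microseconds per quarter note, stored in 3 bytes.
    tempo = struct.pack(">I", round(60_000_000 / bpm))[1:]
    track = vlq(0) + b"\xFF\x51\x03" + tempo
    previous_tick = 0
    for tick, _, message in events:
        track += vlq(tick - previous_tick) + message  # delta time, then message
        previous_tick = tick
    track += vlq(0) + b"\xFF\x2F\x00"  # end-of-track meta event

    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + track


# Two made-up notes: middle C, then the E above it, one beat each.
midi_bytes = build_midi([(0, 60, 100, 1), (1, 64, 80, 1)])
print(len(midi_bytes), "bytes")
```

Writing `midi_bytes` to a file opened in binary mode (`"wb"`) with a `.mid` extension should give you something an audio editor can import. Because the file contains no instrument sound of its own, swapping the instrument or changing the tempo later does not require regenerating the data.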

Here are the ways I customized my sonification:

- Instrument adjustment: I selected an electric piano that sounded powerful and responsive to the velocity changes.

- External audio: I collected news segments from YouTube videos and podcasts related to the important events that occurred within the data’s timeframe.

- Time marker: I added a triangle instrument to indicate the passing of each year. I also included cricket sounds during the pandemic moratorium time segment.

- Narration: I wrote a narrative script and recorded my voice to explain the story and components of the sonification.

(Note: I got feedback from peers and made adjustments along the way.)
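If you want to place a time marker precisely rather than by ear, it can help to compute where each year begins on the timeline. The following is a small sketch of that arithmetic, assuming monthly data points spaced a fixed number of beats apart. The function names, the monthly granularity, and the tempo are assumptions for illustration, not details of my project file.

```python
def year_marker_beats(start_year, start_month, n_months, beats_per_month=1.0):
    """Return (year, beat) pairs for every January that falls within the series."""
    markers = []
    for i in range(n_months):
        month_index = (start_month - 1) + i
        year = start_year + month_index // 12
        month = month_index % 12 + 1
        if month == 1:
            markers.append((year, i * beats_per_month))
    return markers


def beats_to_seconds(beat, bpm):
    """Convert a beat position to seconds at a constant tempo."""
    return beat * 60.0 / bpm


# A series starting in March 2017 and running for 24 months.
markers = year_marker_beats(2017, 3, 24)
for year, beat in markers:
    print(year, "starts at beat", beat, "=", beats_to_seconds(beat, 120), "seconds")
```

The beat positions tell you where to drop a marker sound in a tempo-synced editor, while the seconds are handy for lining up narration or video slides.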

Step 4 [Optional]: Create visual accompaniment

To provide a visual accompaniment that I could embed in this article, I created a data visualization of the data in Flourish, and I used a video editor to animate a set of visual slides aligned with the timing of the sonification. I decided to make a “video” version of this sonification because I find that YouTube is very accessible for sharing content with others.

You can choose to stick with the audio-only version if you like — it all depends on how you intend to apply and share your project.

Step 5: Export and share

Time to share the sonification! If you’ve followed along with this tutorial, perhaps you’ve used different data and made your own customizations.

Here is how my NYC Eviction Sonification turned out:

(Audio-only version without narrative explanation available here.)

Example of the month

This month’s featured sonification example is in fact many examples in one. The Loud Numbers Sonification Festival was a live-streamed event on June 5th, 2021. It was hosted by the creators of the Loud Numbers podcast (Duncan Geere and Miriam Quick) in celebration of the launch of their first podcast season. It is loaded with phenomenal sonification content, featuring several presentations as well as Q&A sessions with sonification creators. It is incredibly insightful to hear the process behind various sonification projects from a variety of researchers, journalists, and audio enthusiasts. This video will surely ignite inspiration for anyone interested in turning data into sound and music.

Cite this article

Walmer, Aura (2024, Dec. 3). Customization is key to a compelling sonification. Reynolds Journalism Institute. Retrieved from: https://rjionline.org/news/customization-is-key-to-a-compelling-sonification/