How to detect disinformation created with AI

A step-by-step guide with examples

When creating a guide to assist journalists with day-to-day tasks, above all, that guide needs to be useful. It must be clear, direct, and practical, explaining what to do, step by step. That way, we have the information handy when we find ourselves in an urgent situation and need to publish quickly and accurately.

As we mentioned in our first article, at Factchequeado we conducted a survey (in English and Spanish) with our media partners, and their responses are helping us shape this guide. We asked them what tools and skills they need to improve their reporting and combat disinformation. As expected, there was almost unanimous agreement that “How to identify whether a picture is fake or created with AI” is a necessity.

As of September 2023, we have two pieces of news: one bad and one good. Should we start with the bad news? In 2024, we will have the first elections in the United States with the use of AI, without clear regulations or rules for political campaigns or social media, as Laura Zommer, one of the co-founders of Factchequeado, wrote. And we are already seeing AI in use by some candidates.

In the case of the video titled “What if” (Biden is re-elected)…, published on the Republican Party’s (GOP) YouTube account, apocalyptic images are used, including bombings, long lines to find supplies, destruction, and desperate and disoriented people. All of this is created with AI to depict great chaos “if Biden were to win the elections again.” In these images, you can read in small letters at the top of the screen, for 4 seconds, that it is a creation of AI: “Built entirely with AI imagery.” In the post’s description, it also states: “An AI-generated look into the country’s possible future if Joe Biden is re-elected in 2024.”

However, we have also seen images created with AI to promote a partisan message and published without any disclaimer that they are not authentic. For instance, the campaign of Ron DeSantis, a candidate for the Republican nomination, used such images to show Donald Trump hugging Anthony Fauci, the White House’s top doctor during the pandemic, in a critique of former President Trump (one of DeSantis’s competitors in the Republican Party primaries) for not firing Fauci during his term.

So, what’s the good news then? The good news is that, up until now, many of the tools we rely on as journalists, such as detailed observation, constant doubt, critical evaluation of context, and fact confirmation from official and alternative sources, will allow us to unearth the truth.

Claire Wardle, co-director of the Information Futures Lab at Brown University, was consulted by Discover Magazine about how to detect images created with AI. She explained: “Yes, there are small clues that you can see in the image, but just think and do your research.”

She also used a comparison to describe what happens with disinformation that uses AI: “There’s amazing things coming out of this new technology… But it’s a bit like if a faster car comes along; we have to drive a bit more carefully.”

So, this “step-by-step” evaluation to detect AI content will be part of our guide for journalists, with examples, links, and images, on how to drive with great care and detect this type of disinformation.

Warning: Following just one of these steps doesn’t necessarily lead us to a solution; usually, it’s the combination of them. Sometimes, even then, they don’t provide certainty but rather generate more doubts. And when in doubt, the recommendation is to not publish or amplify potential disinformation.

1. Observe, but not just the image

When dealing with images circulating on social media and wanting to verify their authenticity, it’s best to take a screenshot and zoom in. However, don’t make the mistake of going straight to the image without considering the context and other details. For example, in this case, looking at the hashtags (#) used by the account that posted the image of the Pope with the LGBTQ+ flag, you could see #midjourney and #midjourneyv5, which are the names of tools used to create such images using AI. You may also find references (sometimes watermarks) from similar tools like DALL-E and Stable Diffusion.
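The hashtag check described above can be partly automated when reviewing many posts. The following is a minimal sketch, not a definitive detector: the function name `find_ai_tool_hashtags` and the list `AI_TOOL_NAMES` are assumptions made for illustration, and the list only covers the three tools named in this guide.

```python
import re

# Illustrative, non-exhaustive list of generator names (assumption: extend as needed).
AI_TOOL_NAMES = ("midjourney", "dalle", "stablediffusion")


def find_ai_tool_hashtags(post_text: str) -> list[str]:
    """Return the hashtags in a post that contain the name of a known AI image tool."""
    # Capture the text after each '#', allowing letters, digits, '_' and '-'.
    hashtags = re.findall(r"#([\w-]+)", post_text)
    matches = []
    for tag in hashtags:
        # Normalize so that '#DALL-E' and '#stable_diffusion' also match.
        normalized = re.sub(r"[^a-z0-9]", "", tag.lower())
        if any(name in normalized for name in AI_TOOL_NAMES):
            matches.append("#" + tag)
    return matches


if __name__ == "__main__":
    caption = "The Pope with a flag #midjourney #midjourneyv5 #art"
    print(find_ai_tool_hashtags(caption))
```

A match is only a lead to follow up on. The absence of such hashtags proves nothing, since most AI-generated images circulate without any label.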

2. Search for the source of the image

Finding out the origin of the image or who the first person was to publish it is crucial. You can use a search engine like Google, search tools on X (formerly Twitter), or link archivers like archive.li or Wayback Machine, which help locate deleted links.
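For deleted posts or pages, the Wayback Machine lookup can also be scripted. The sketch below assumes the Internet Archive’s public availability endpoint (`https://archive.org/wayback/available`) and the JSON shape it has been documented to return (`archived_snapshots` → `closest`). The helper names are hypothetical, and the endpoint or its response format may change.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the Internet Archive's public "availability" endpoint.
WAYBACK_API = "https://archive.org/wayback/available"


def build_wayback_query(page_url: str, timestamp: str = "") -> str:
    """Build the lookup URL; timestamp is optional, in YYYYMMDD form."""
    params = {"url": page_url}
    if timestamp:
        params["timestamp"] = timestamp
    return WAYBACK_API + "?" + urllib.parse.urlencode(params)


def closest_snapshot(payload: dict):
    """Return the archived copy's URL from the API response, or None if there is none."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def lookup(page_url: str, timestamp: str = ""):
    """Query the service over the network and return the closest snapshot URL, if any."""
    query = build_wayback_query(page_url, timestamp)
    with urllib.request.urlopen(query, timeout=10) as response:
        return closest_snapshot(json.load(response))
```

Calling `lookup("https://example.com/some-post")` would return the address of the closest archived copy, or `None` when the page was never captured. A missing snapshot does not mean the post never existed, only that nobody archived it.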

It’s worth asking questions like, does this “photo” come with the credit of a photographer or news agency? Is there an identified user? Can we learn more about them? What do they say? Do they identify themselves as image creators or someone who makes parodies? We can even reach out and ask them questions about the content they posted.

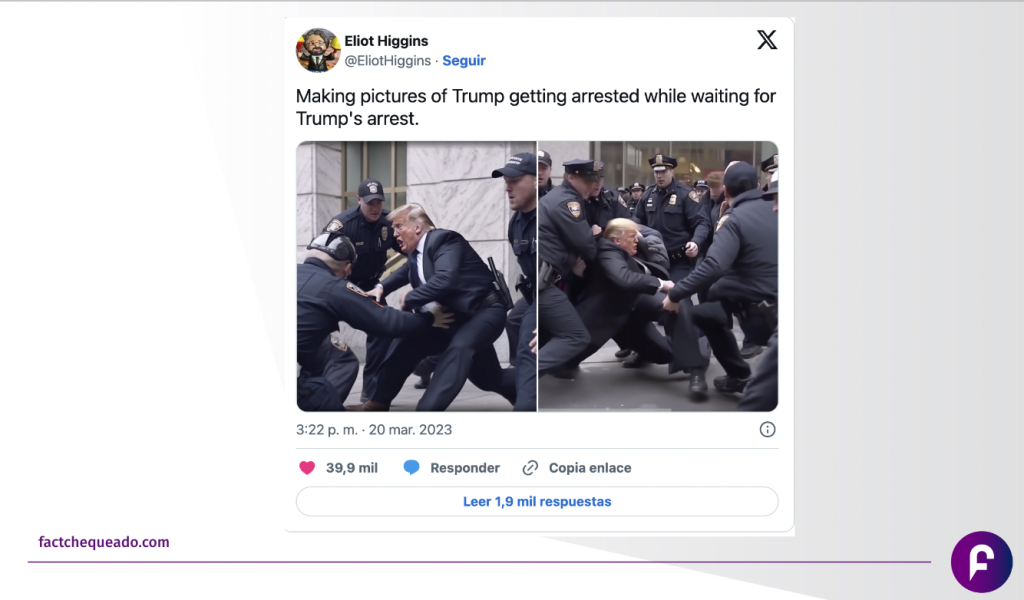

The case of the viral image of former President Trump supposedly being arrested on the streets of New York is another example of how carefully reading the original post provides us with information. In this scenario, the image’s creator mentioned that they had made it “while waiting for Trump’s arrest.”

3. Use your journalistic instincts

Ask yourself questions based on your professional experience. If an event of such magnitude had occurred, such as a large demonstration, the arrest of a former president, or an extraordinary appearance by a figure like the Pope, news agencies should have covered it. Is this information published on the official account or page of the person at the center of the news? Have local media or outlets that typically cover such events reported on it? Do we know the original source of the information? If it appears to be a well-known media outlet, is that their actual logo and colors?

4. Conduct a reverse image search

Once we know its origin, we can perform a reverse image search using Google Lens or another search engine like TinEye. This allows us to see if the image has been previously published and if reliable sources have confirmed its legitimacy.
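When the image is hosted at a public address, the search pages for both services can be opened directly from a link. The sketch below is an illustration under stated assumptions: the URL patterns for Google Lens and TinEye are based on how their public web interfaces have accepted an image address, they are not official APIs, and they may change. The function name `reverse_search_links` is hypothetical.

```python
from urllib.parse import quote


def reverse_search_links(image_url: str) -> dict[str, str]:
    """Build reverse-image-search links for an image that is publicly accessible online."""
    # Percent-encode the whole address so it can travel safely as a query parameter.
    encoded = quote(image_url, safe="")
    return {
        # Assumption: URL patterns of the public web interfaces, not documented APIs.
        "Google Lens": f"https://lens.google.com/uploadbyurl?url={encoded}",
        "TinEye": f"https://tineye.com/search?url={encoded}",
    }


if __name__ == "__main__":
    for name, link in reverse_search_links("https://example.com/photo.png").items():
        print(name, link)
```

For screenshots or images that are not online, uploading the file manually on each site remains the practical route.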

5. Now, focus on the image and its details

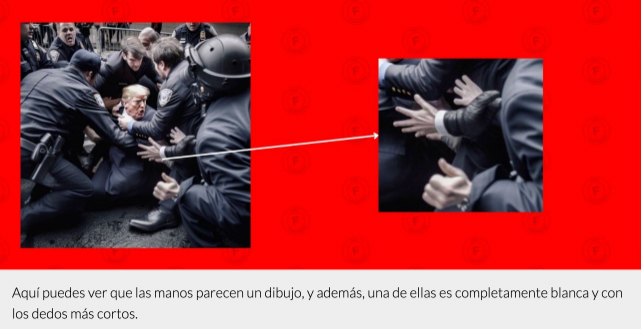

Although these types of images have a fairly realistic appearance, the technology still has some errors that we can detect. Of course, this technology is evolving rapidly, and it’s possible that these details will be perfected in a few months. Hands are a part of the human body that this software hasn’t yet perfected. That’s why it’s important to examine them closely: Do they have 5 fingers? Are all the contours clear? If they’re holding an object, are they doing so in a normal way? If we were to recreate the posture, could we hold the object, or would it fall?

Another example is the “photos” of President Joe Biden supposedly dancing in the White House with Vice President Kamala Harris to “celebrate” the indictment of Trump in New York in the Stormy Daniels case:

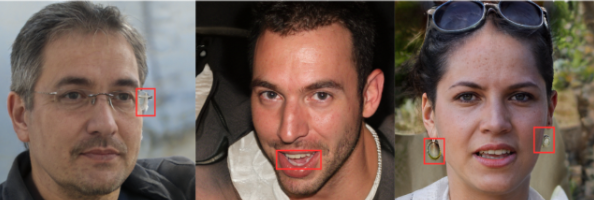

The eyes are also a key aspect. The MIT Media Lab, part of the School of Architecture and Planning at the Massachusetts Institute of Technology (MIT) in the United States, advises paying attention to this part of the body, especially if the subject of the image is wearing glasses. In this case, it is useful to analyze whether the shadows on the lenses make sense in relation to the context in which they are placed.

If the person in the image appears to have a vacant or different expression, it can also be a sign that it is an image created with artificial intelligence.

The face and skin: The texture of these areas can appear particularly artificial. Henry Ajder, a specialist in manipulated and artificially generated media, told The Washington Post, “Artificial intelligence software smooths them out too much and makes them look overly shiny.”

Ears: They may be positioned at different heights, have uneven sizes, or show unusual textures. Images can even be generated with different earrings on each ear or earrings that blend with the skin.

Teeth: Are there too many teeth, or are they shrunken, elongated, or misaligned?

Hair: It may blend into the surroundings, look overly straight, or include stray hairs stuck to the eyebrows.

6. Review the context of the image

To determine if an image was created by artificial intelligence, we not only need to pay attention to the subject, but also to the environment in which they are placed. If the supposed photograph was taken on a street, do the signs look correct, or are they blurry? Do they correspond to the location where the scene supposedly took place? We know that technology still exhibits errors in certain details like these.

We also have to pay special attention to the proportions of different people and objects in the image. In some cases, this technology creates compositions in which the different parts are not proportionate, and their sizes differ from what we see in real life.

6. Automatic Detection Tools

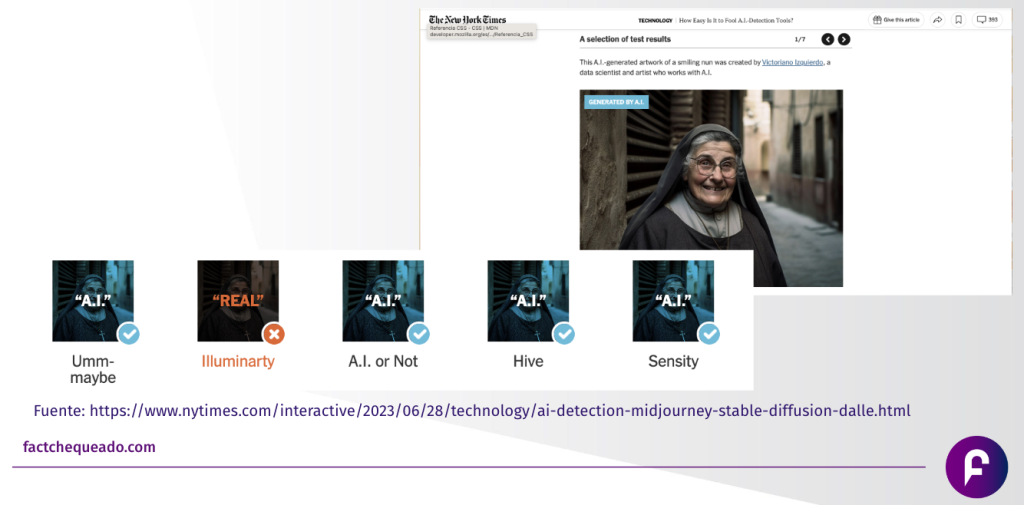

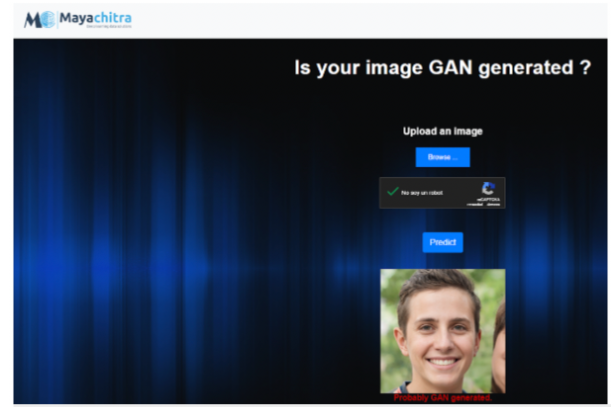

There are automatic detection tools that allow you to identify images created by artificial intelligence. However, so far, they are not 100% foolproof. Maldita.es, a founding member of Factchequeado, tested the online platform Mayachitra, which allowed them to identify images created by the web image generator This Person Doesn’t Exist.

However, they detected issues with examples from the DALL-E 2 platform. Similarly, The New York Times conducted a test – and shared findings in an article published on June 28, 2023, with 5 of these platforms that are supposed to help detect such images and found that all of them had shortcomings.

7. In the case of videos and deepfakes

To detect a deepfake, the MIT Media Lab, part of the School of Architecture and Planning at the Massachusetts Institute of Technology, advises paying attention to the face of the person who appears in the recording and asking some questions: “Does the skin appear too smooth or too wrinkled? Is the aging of the skin similar to that of the hair and eyes?” In other words, the approach is similar to what we described earlier: observe the details closely.

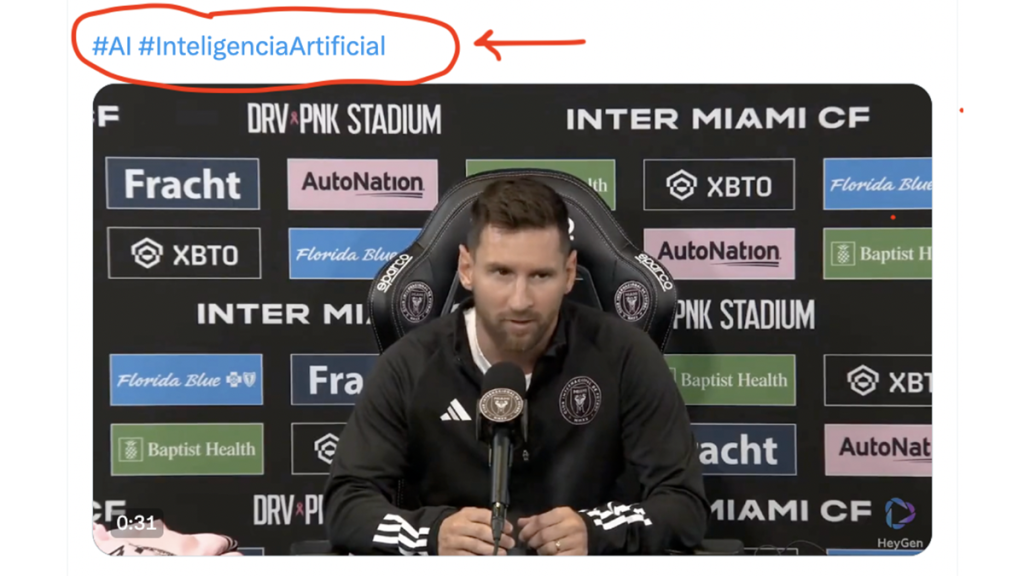

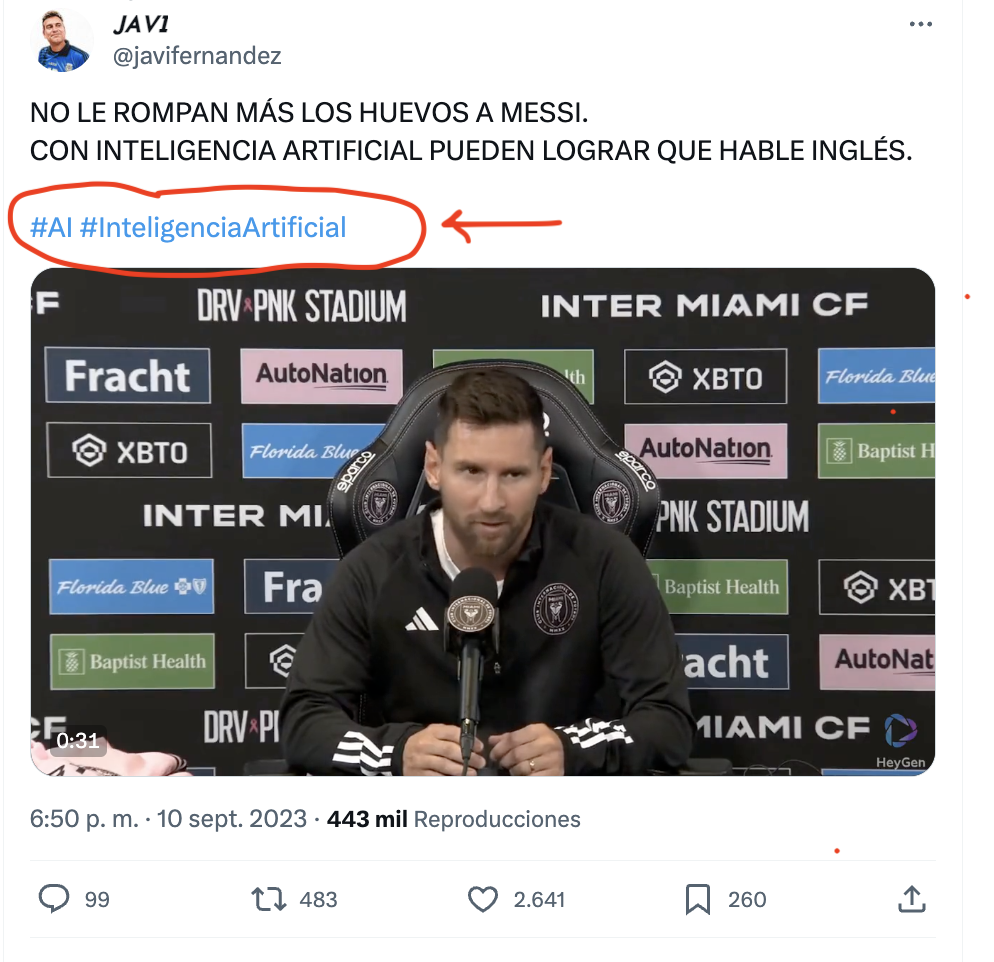

However, there are AI tools, even available on smartphones, that allow for voice translations into other languages after transcribing speeches or interviews taken from social media. This is what happened with the Argentine player from Inter Miami, Lionel Messi, whom we could see and hear speaking perfect English with good coordination between his lips and the sound.

The recording of Messi speaking in English was created by the Argentine user on X (formerly Twitter) @javifernandez using the HeyGen application, a tool that allows you to dub a video in any language while maintaining the original voice of the person. But before looking for a tool, we can conduct journalistic research that indicates:

- In the tweet, hashtags in Spanish indicate that it was created with AI: #AI #Artificialintelligence.

- A search on YouTube shows that the original video corresponds to a conference by the soccer player on August 17, 2023, the first one after joining the American team. In the actual recording, published by the official Inter Miami account, you can hear the footballer speaking in Spanish. Specifically, at the 11:35 minute mark, you can see the same sequence as the AI-generated video, but in Spanish.

- Contextual information about the individual indicates that Messi does not usually respond to interviews in English but chooses to speak in his native language, Spanish, as he has explained on several occasions.
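When documenting a comparison like the one at the 11:35 mark, it helps to link straight to the relevant moment of the original recording. The sketch below converts a timestamp to seconds (11:35 is 11 × 60 + 35 = 695) and appends it to a YouTube link using the `t` parameter. The function names are hypothetical and `VIDEO_ID` is a placeholder, not the identifier of the actual Inter Miami video.

```python
def timestamp_to_seconds(mark: str) -> int:
    """Convert 'MM:SS' or 'HH:MM:SS' into a total number of seconds."""
    seconds = 0
    for part in mark.split(":"):
        # Each new part shifts the running total by a factor of 60.
        seconds = seconds * 60 + int(part)
    return seconds


def youtube_link_at(video_id: str, mark: str) -> str:
    """Build a YouTube link that starts playback at the given timestamp."""
    return f"https://www.youtube.com/watch?v={video_id}&t={timestamp_to_seconds(mark)}s"


if __name__ == "__main__":
    # VIDEO_ID is a placeholder for the identifier of the original recording.
    print(youtube_link_at("VIDEO_ID", "11:35"))
```

Sharing the deep link alongside the AI-generated clip lets readers compare both versions of the same sequence for themselves.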

All of this information can help you before you delve into the technical aspect of image detection. At this point, it’s worth noting that, as Factchequeado has explained, several users on social media claim to have translated these videos using translation platforms, voice synchronization, and synthetic voices (generated by software and capable of imitating our way of speaking). Some of these tools are:

- Rask. The company claims to be able to translate up to 130 languages with a monthly subscription.

- In June 2023, YouTube announced a partnership with the company Aloud, to offer translation for videos on the platform. Content creators like Daily Dose of Internet are already sharing their videos in multiple languages through AI-translated audios.

- HeyGen has gone a step further and also offers video translation with lip synchronization. This reinforces the credibility of the speaker we see in our chosen language, even if the person in question does not actually speak that language.

- Eleven Labs demonstrated this with a speech by actor Leonardo DiCaprio. It’s a tool they are testing for automatic dubbing, preserving the voice and emotions of speakers in different languages.

These are just some tools and steps to follow, but it is very likely that we will have more to share in the coming months. AI, the car coming at full speed in the opposite direction, is accelerating, and journalists cannot veer off the road. We need to keep improving our vision, rely on our experience, and activate all our senses, while keeping our hands on the wheel. Hopefully, at the same time, AI regulations will be instituted to provide clear traffic signals.
